Overview

Moreh develops software to enable various AI workloads – from pretraining to inference – to run efficiently on non-NVIDIA accelerators, with a particular focus on AMD GPUs.

vLLM is one of the most widely adopted inference engines for running LLM services in research, enterprise, and production environments. It is developed by a strong open-source community with contributions from both academia and industry, and provides broad support for various models, hardware, and optimization techniques. AMD is also contributing to the project to make vLLM run on AMD GPUs and the ROCm software stack. Nevertheless, most optimizations in vLLM still target NVIDIA GPUs, and the performance of AMD GPU hardware has yet to be fully utilized.

Moreh vLLM is our optimized version of vLLM, designed to deliver superior LLM inference performance on AMD GPUs. It supports the same models and features as the original vLLM, while maximizing computational performance on the AMD CDNA architecture. This is achieved through Moreh’s proprietary compute and communication libraries, along with model-level optimizations and vLLM engine-level modifications.

This technical report evaluates the inference performance of Meta’s Llama 3.3 70B model on Moreh vLLM. We test a range of input/output lengths and concurrency levels. Compared to the original vLLM, Moreh vLLM delivers 1.68x higher throughput (total output tokens per second) on average. It also reduces the two latency metrics, time to first token and time per output token, by 2.02x and 1.59x on average, respectively. These results indicate that Moreh vLLM allows AMD MI300 series GPUs to serve as an efficient inference system.

AMD Instinct MI300X GPU

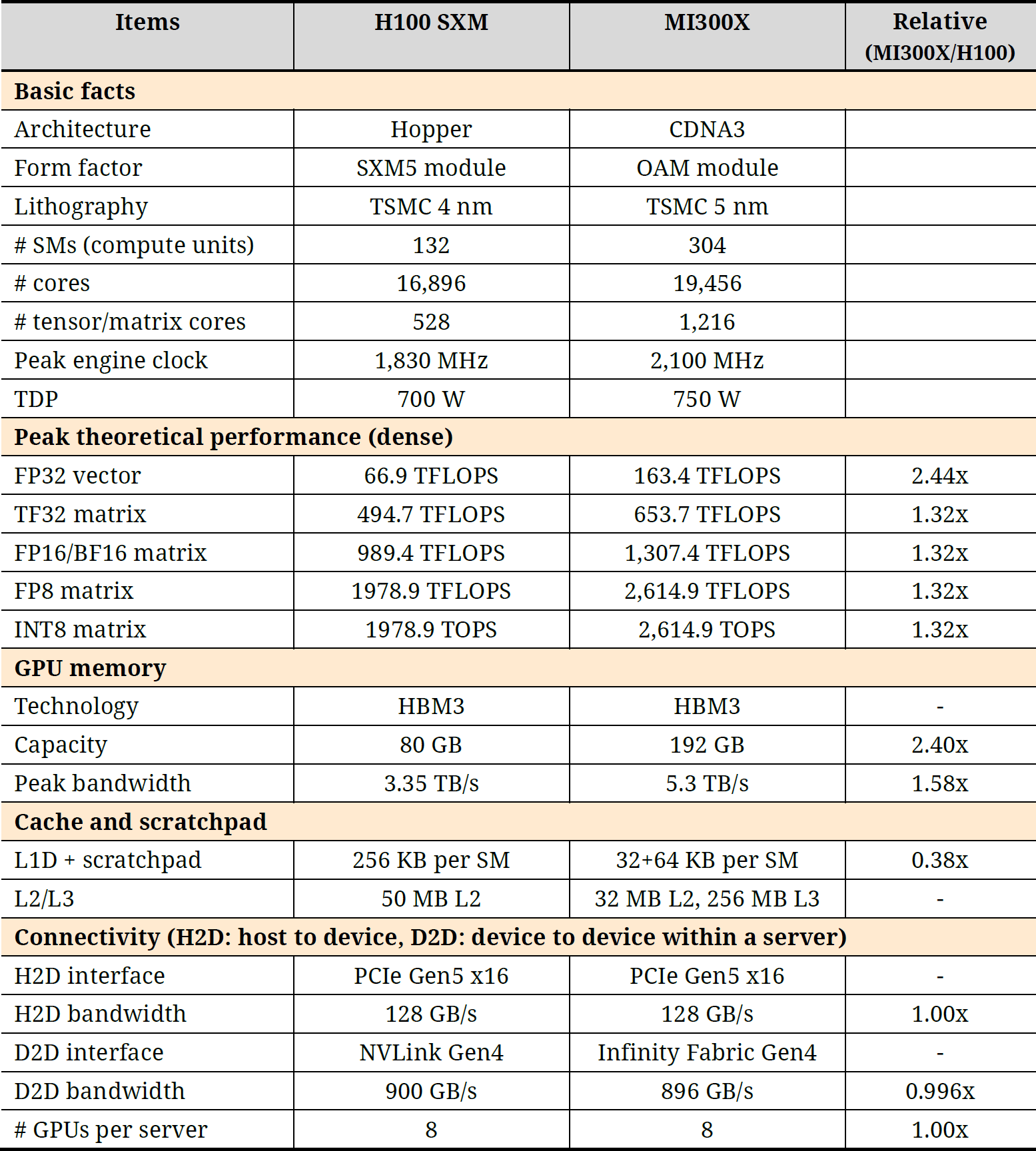

The AMD Instinct MI300X GPU presents a compelling alternative to NVIDIA’s H100. It provides 1.32x higher theoretical compute performance, 2.4x larger memory capacity, and 1.58x higher peak memory bandwidth compared to the H100. In particular, its significantly larger memory capacity and bandwidth are a major advantage for optimizing LLM inference. Table 1 compares the detailed hardware specifications.
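As a quick sanity check, the ratios quoted above can be reproduced from peak figures. The sketch below is illustrative only: the spec values are assumptions taken from publicly listed vendor datasheet numbers (dense FP16 throughput, HBM capacity, peak memory bandwidth), not from Table 1, and the dictionary keys are names chosen for this example.

```python
# Assumed peak figures from public vendor datasheets (not copied from Table 1):
# dense FP16 TFLOPS, HBM capacity in GB, and peak memory bandwidth in TB/s.
SPECS = {
    "MI300X": {"fp16_tflops": 1307.4, "memory_gb": 192, "bandwidth_tb_s": 5.3},
    "H100": {"fp16_tflops": 989.4, "memory_gb": 80, "bandwidth_tb_s": 3.35},
}


def spec_ratios(a: str, b: str) -> dict:
    """Return the ratio of each spec of GPU `a` to GPU `b`, rounded to 2 decimals."""
    return {key: round(SPECS[a][key] / SPECS[b][key], 2) for key in SPECS[a]}


ratios = spec_ratios("MI300X", "H100")
print(ratios)
```

Under these assumed figures the ratios come out to 1.32x compute, 2.4x memory capacity, and 1.58x memory bandwidth, matching the values stated in the text.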

AMD has also released the MI325X and MI355X as successors to the MI300X, which are direct competitors to NVIDIA’s H200 and B200 GPUs, respectively. Since these next-generation models are also based on the AMD CDNA architecture family (CDNA 3 for the MI325X and CDNA 4 for the MI355X), we expect the optimizations within Moreh vLLM to carry over to them. We plan to publish performance evaluation results on the MI325X and MI355X in the near future and are always open to partners who can provide development and testing servers.

Experimental Setup

All experiments were conducted on an MI300X server configured as follows:

- Server: Lenovo ThinkSystem SR685a V3

- CPU: 2x AMD EPYC 9534 (128 cores in total, 2.45 GHz)

- GPU: 8x AMD Instinct MI300X OAM

- Main Memory: 2,304 GB (24x 96 GB)

- Operating System: Ubuntu 22.04.4 (Linux kernel 5.15.0-25-generic)

- ROCm Version: 6.8.5

We used the open-source vLLM 0.9.2 (tag v0.9.2 of https://github.com/ROCm/vllm) as the baseline for comparison. This was the latest version available at the time of testing.

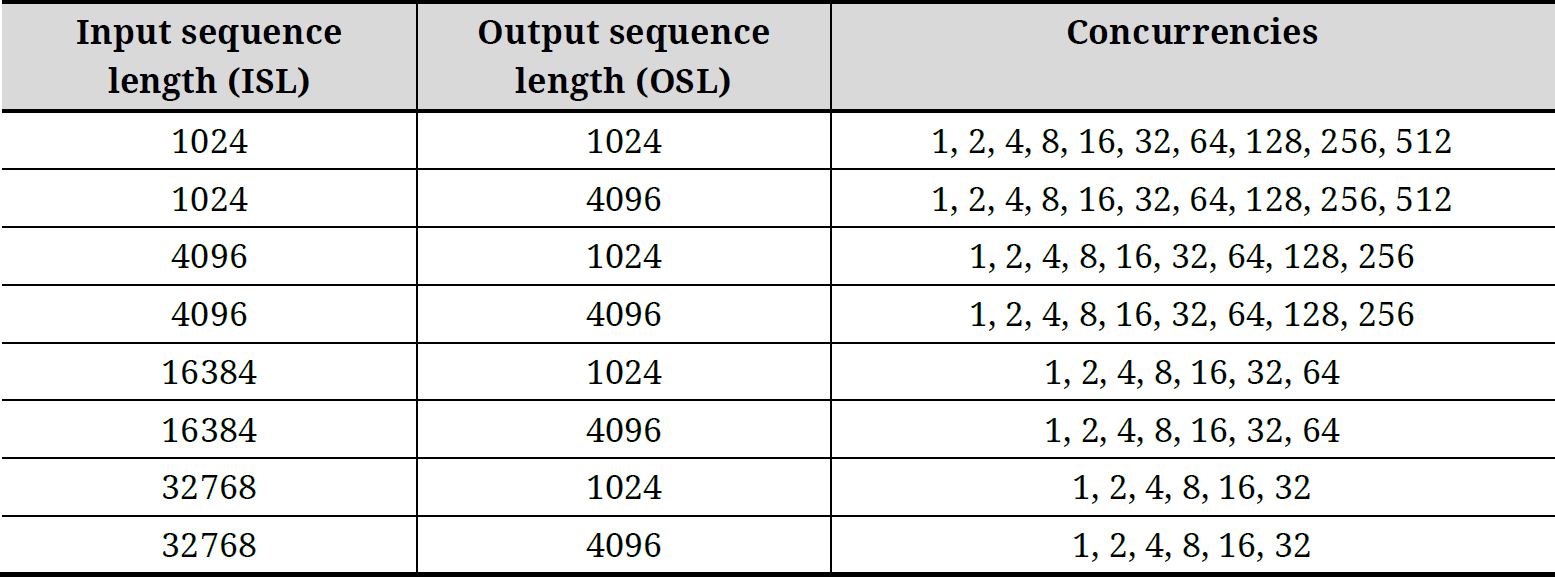

The Llama 3.3 70B model was executed in parallel across 2 GPUs of the server with a tensor parallelism (TP) of 2. Performance was measured using vLLM’s benchmark_serving tool. We chose 64 different combinations of input sequence length (ISL), output sequence length (OSL), and concurrency, as shown in Table 2.
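A sweep of this kind can be scripted by generating one benchmark invocation per combination. The following is a minimal sketch, not the exact procedure used for this report. The ISL, OSL, and concurrency values are hypothetical placeholders (the real ones are in Table 2), and so are the full 4x4x4 grid shape, the model identifier, the script path, and the `prompts_per_slot` multiplier. The command-line flags are those of vLLM’s `benchmark_serving.py` with its random dataset.

```python
import itertools
import shlex

# Hypothetical grid; the actual values used in the report are listed in Table 2.
INPUT_LENS = [1024, 2048, 4096, 8192]
OUTPUT_LENS = [128, 256, 512, 1024]
CONCURRENCIES = [1, 8, 32, 128]

# Assumed Hugging Face model identifier for Llama 3.3 70B.
MODEL = "meta-llama/Llama-3.3-70B-Instruct"


def build_command(isl: int, osl: int, concurrency: int, prompts_per_slot: int = 4) -> str:
    """Build one benchmark_serving command line for a single configuration."""
    args = [
        "python", "benchmarks/benchmark_serving.py",
        "--backend", "vllm",
        "--model", MODEL,
        "--dataset-name", "random",
        "--random-input-len", str(isl),
        "--random-output-len", str(osl),
        "--max-concurrency", str(concurrency),
        # Send several prompts per concurrency slot so the server reaches steady state.
        "--num-prompts", str(concurrency * prompts_per_slot),
    ]
    return shlex.join(args)


commands = [
    build_command(isl, osl, conc)
    for isl, osl, conc in itertools.product(INPUT_LENS, OUTPUT_LENS, CONCURRENCIES)
]
print(len(commands))
print(commands[0])
```

The server itself would be started separately with a tensor-parallel size of 2. Each generated command then targets that running instance.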

The experimental setup was determined through discussions with one of our customers in Korea.

Output TPS, TTFT, and TPOT

Output tokens per second (TPS), time to first token (TTFT), and time per output token (TPOT) are three key metrics for evaluating the performance of LLM inference.

- Output tokens per second measures the overall throughput of the system, indicating how many tokens the model can generate in one second across all concurrent requests.

- Time to first token captures the initial latency – the time from when a request is sent until the very first token is produced.

- Time per output token indicates the average time taken to generate each subsequent token after the first one.

Output tokens per second is directly tied to service cost (dollars per token), while the latter two metrics determine user-perceived responsiveness. Together, these three metrics provide a comprehensive view of inference performance, balancing cost and user experience.
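To make the three definitions concrete, here is a minimal sketch of how they can be computed from per-request timestamps. The `RequestTrace` type and its field names are hypothetical and chosen for illustration. They are not the data structures used by vLLM’s benchmark tool, whose exact accounting may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RequestTrace:
    """Timestamps in seconds for one request (illustrative structure)."""
    sent_at: float
    token_times: List[float]  # arrival time of each output token, in order


def ttft(trace: RequestTrace) -> float:
    """Time from sending the request until the first output token arrives."""
    return trace.token_times[0] - trace.sent_at


def tpot(trace: RequestTrace) -> float:
    """Average time per output token after the first one."""
    n = len(trace.token_times)
    if n < 2:
        return 0.0
    return (trace.token_times[-1] - trace.token_times[0]) / (n - 1)


def output_tps(traces: List[RequestTrace]) -> float:
    """Total output tokens across all requests divided by the wall-clock duration."""
    start = min(t.sent_at for t in traces)
    end = max(t.token_times[-1] for t in traces)
    total_tokens = sum(len(t.token_times) for t in traces)
    return total_tokens / (end - start)


# Two concurrent requests with made-up timestamps.
traces = [
    RequestTrace(sent_at=0.0, token_times=[0.5, 0.75, 1.0, 1.25, 1.5]),
    RequestTrace(sent_at=0.0, token_times=[1.0, 1.25, 1.5, 1.75, 2.0]),
]
print(ttft(traces[0]), tpot(traces[0]), output_tps(traces))
```

Note that throughput is computed over all concurrent requests, whereas TTFT and TPOT are per-request values that are then averaged, which is why the report quotes their means.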

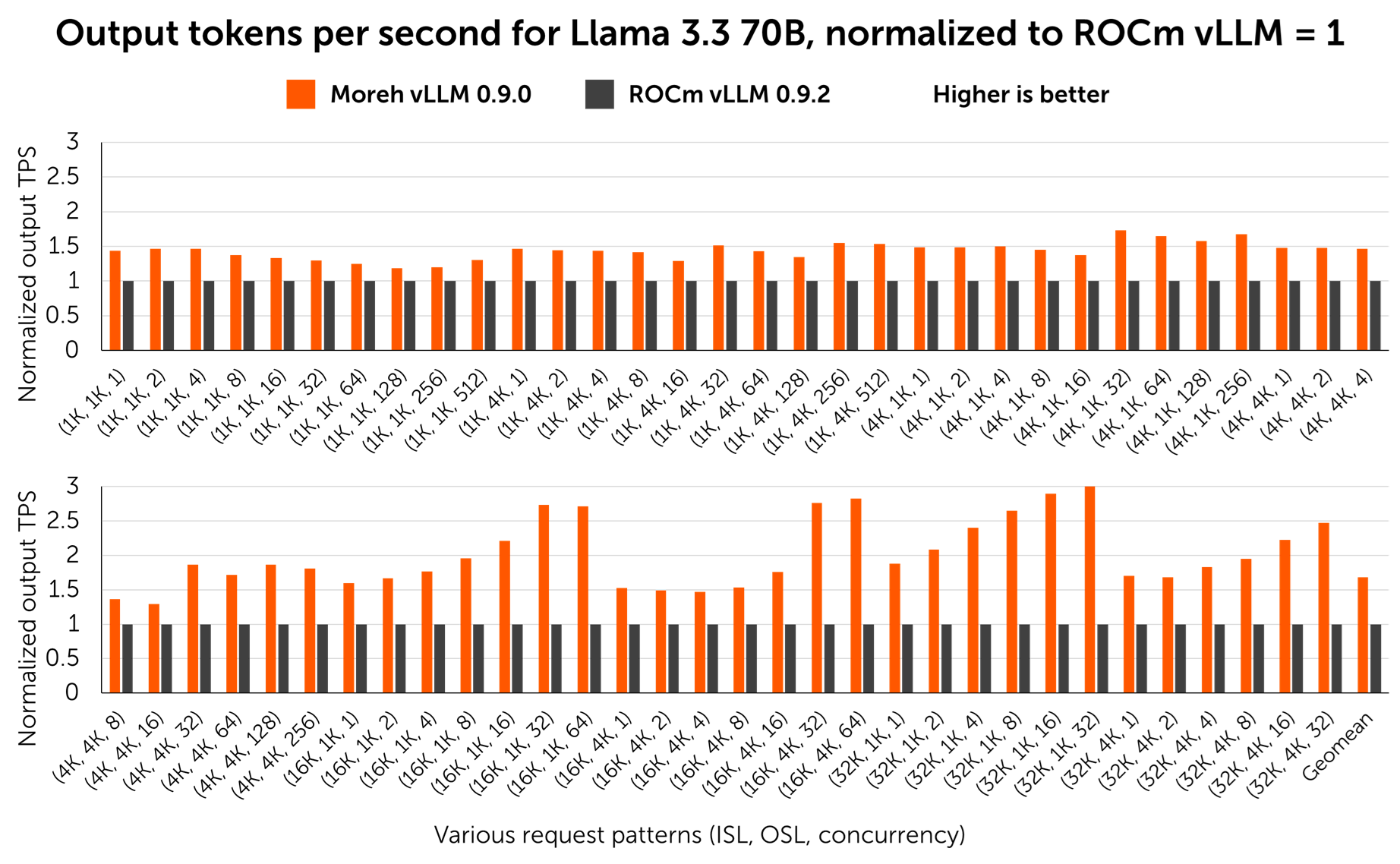

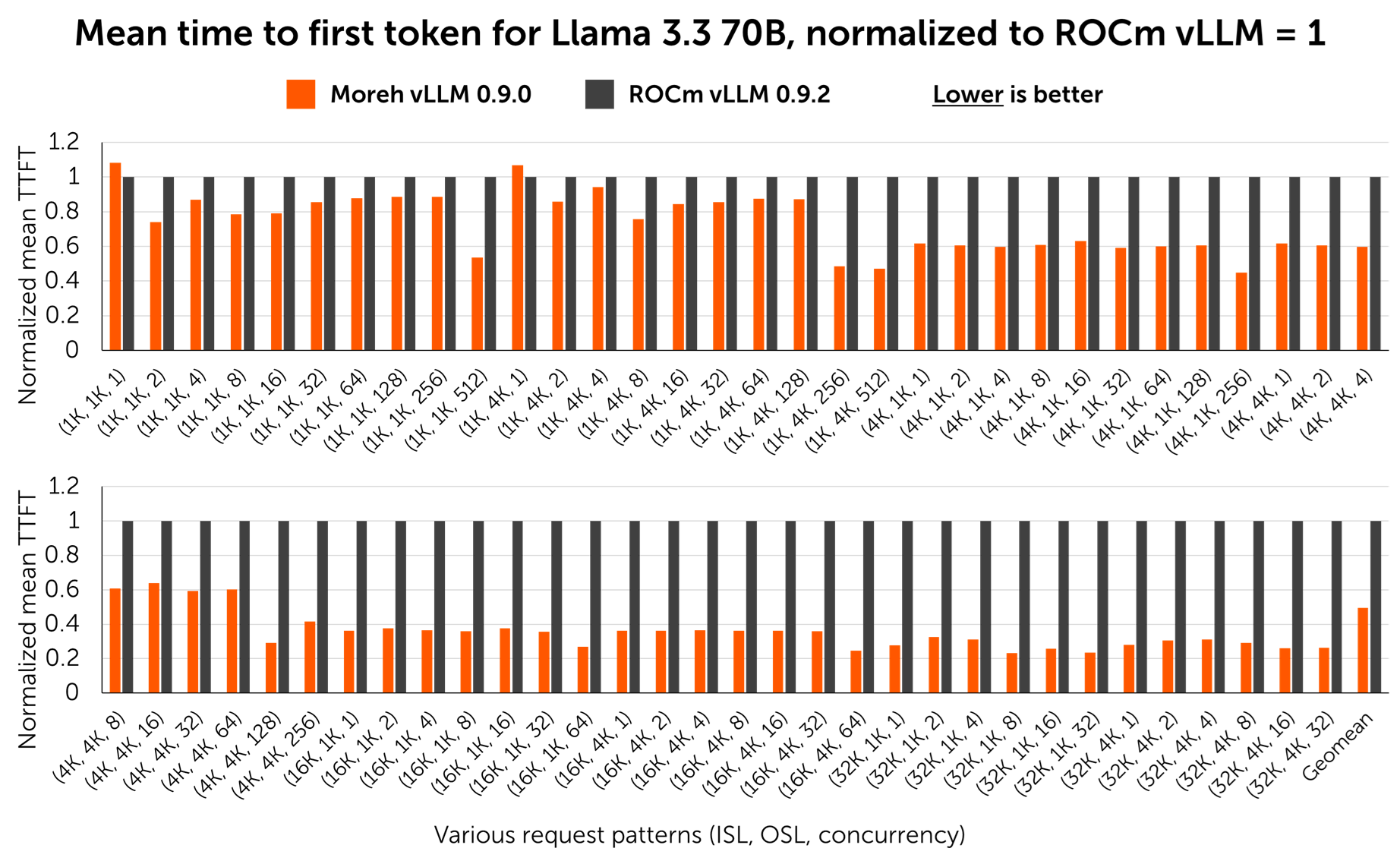

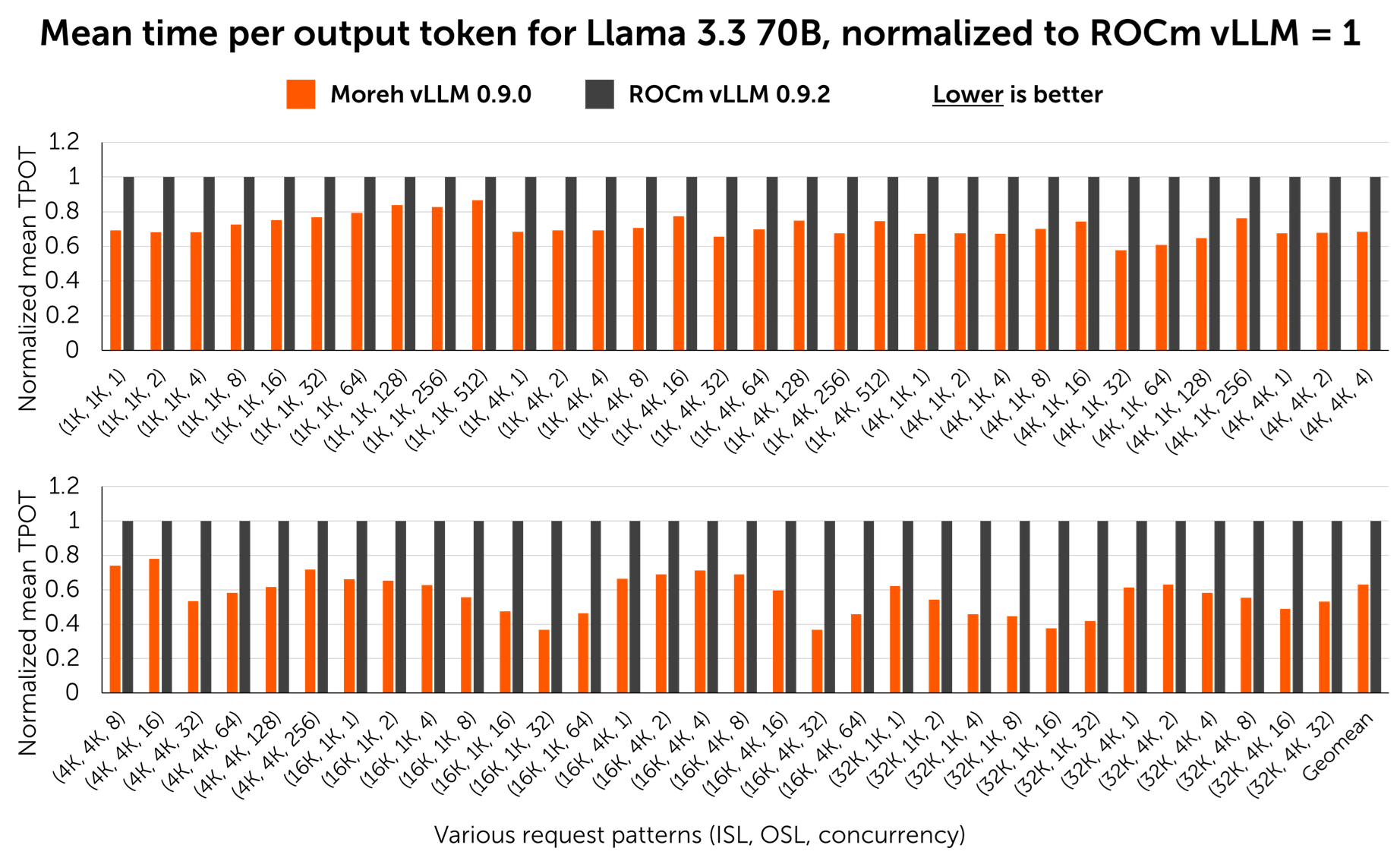

Figure 1 compares output tokens per second. Figure 2 and Figure 3 compare the mean time to first token and the mean time per output token, respectively. The raw data can be found in the appendix. On average, Moreh vLLM achieves 1.68x higher total output tokens per second, 2.02x lower time to first token, and 1.59x lower time per output token compared to the original vLLM. In particular, the time to first token for long input sequences is reduced by about 3-4x. This demonstrates that simply replacing the software with Moreh vLLM on the same AMD MI300 series GPU system can reduce costs while improving user experience.

Trade-Off Between Latency and Throughput

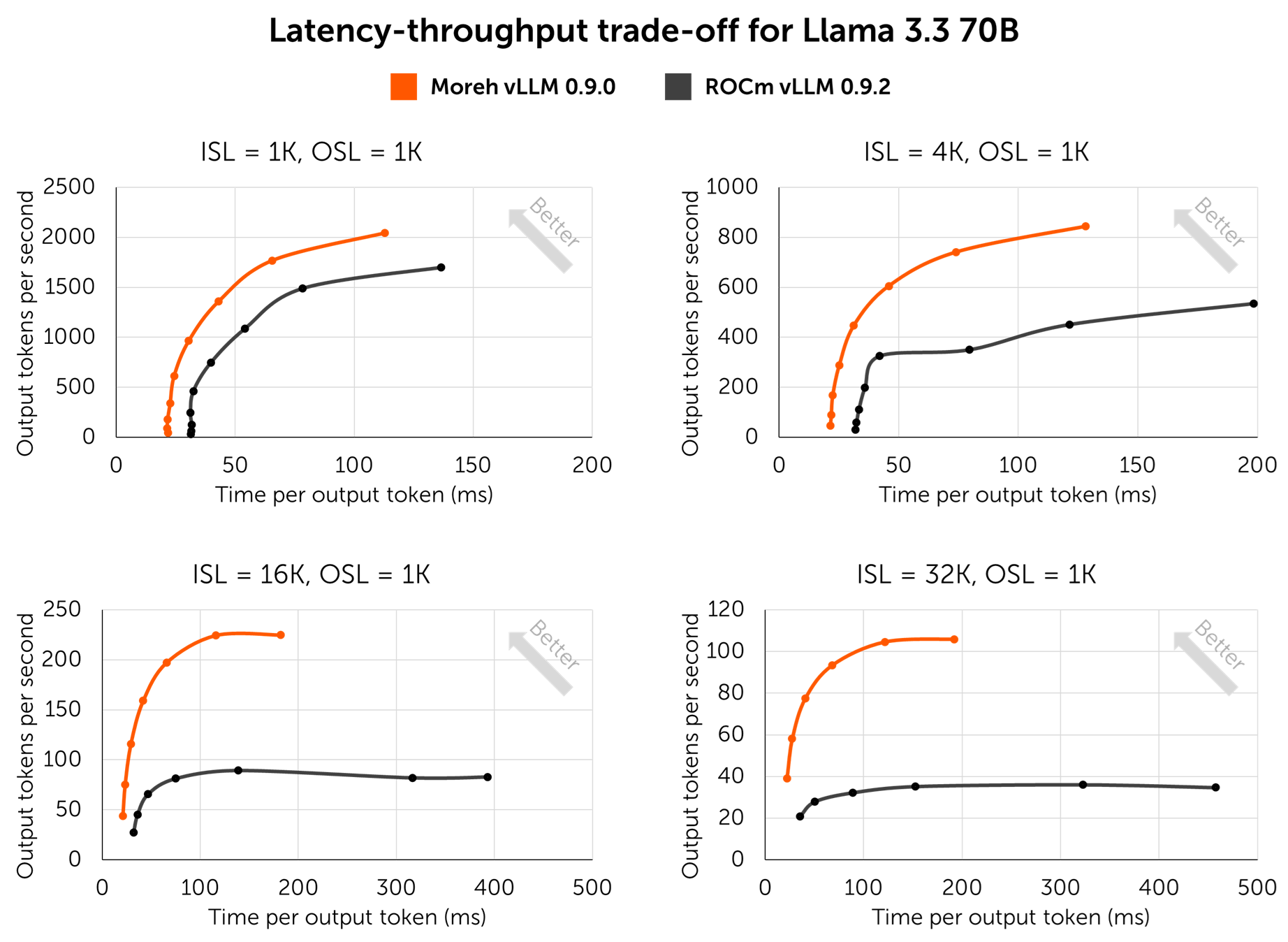

LLM inference involves an inherent trade-off between latency and throughput. Increasing the maximum concurrency of a vLLM instance improves throughput but also increases latency, while decreasing concurrency improves latency but lowers throughput.

Figure 4 shows the latency-throughput trade-off curves for the original vLLM and Moreh vLLM across various request patterns (input/output sequence lengths). Overall, the closer a curve lies to the upper left, the better the performance characteristics.
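In practice, such a curve is used to pick an operating point: the concurrency that gives the highest throughput while still meeting a latency target. The sketch below illustrates that selection. The measurement values are invented for the example and do not come from this report, and the `Point` type and `best_under_slo` function are hypothetical names.

```python
from typing import List, NamedTuple, Optional


class Point(NamedTuple):
    """One measured operating point on a latency-throughput curve."""
    concurrency: int
    tpot_ms: float     # mean time per output token, in milliseconds
    output_tps: float  # total output tokens per second


def best_under_slo(points: List[Point], max_tpot_ms: float) -> Optional[Point]:
    """Return the highest-throughput point whose latency meets the target, if any."""
    feasible = [p for p in points if p.tpot_ms <= max_tpot_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda p: p.output_tps)


# Hypothetical measurements: throughput and latency both rise with concurrency.
curve = [
    Point(concurrency=1, tpot_ms=12.0, output_tps=80.0),
    Point(concurrency=8, tpot_ms=15.0, output_tps=520.0),
    Point(concurrency=32, tpot_ms=24.0, output_tps=1300.0),
    Point(concurrency=128, tpot_ms=55.0, output_tps=2300.0),
]

choice = best_under_slo(curve, max_tpot_ms=30.0)
print(choice)
```

A curve that sits further toward the better corner of the plot yields a higher-throughput feasible point for the same latency target, which is what translates into lower serving cost.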

Conclusion

Moreh vLLM incorporates various techniques to optimize inference for the Llama 3.3 70B model, including proprietary GPU libraries, model-level optimizations, and modifications to the vLLM engine. As a result, Moreh vLLM delivers substantial performance improvements over the original open-source vLLM across various inference metrics. By adopting Moreh vLLM on AMD MI300 series GPU servers, LLM services can reduce costs while simultaneously improving latency. Moreh also provides a service that optimizes a customer’s proprietary AI model on AMD GPUs and delivers on-demand vLLM for it.

Appendix: Raw Data

(Please refer to the PDF file.)