Moreh, a startup specializing in platform software for hyperscale AI clusters, has made significant strides in the field of artificial intelligence with its latest achievement. The company recently announced the successful completion of a monumental LLM (large language model) training project in collaboration with KT (formerly Korea Telecom), a leading cloud service provider in Korea. The model has 221 billion parameters, making it the largest Korean language model so far.

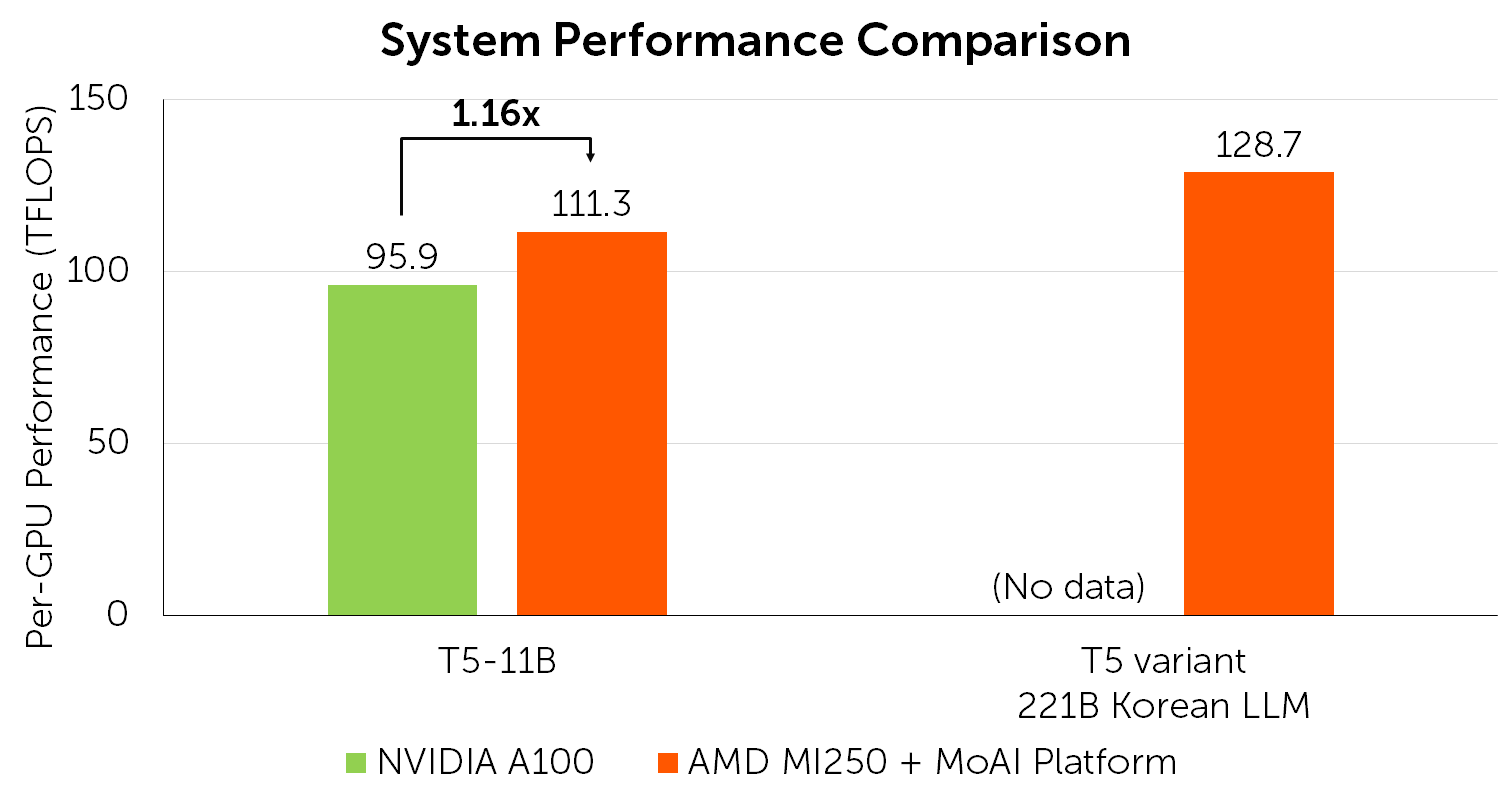

What sets this achievement apart is the groundbreaking approach taken by Moreh. Unlike other LLM development efforts, which rely heavily on NVIDIA technology, Moreh’s project was architected from the ground up on AMD GPU infrastructure. This is the first case in AI model development to show AMD GPUs delivering performance that surpasses NVIDIA GPUs, marking a pioneering milestone in the industry.

Moreh has engineered a comprehensive full-stack software platform that is not bound to a specific hardware vendor and can support various device backends, including AMD GPUs. This platform encompasses every layer from primitive libraries to a run-time IR constructor and a unique AI compiler, demonstrating a level of completeness akin to, or even exceeding, NVIDIA CUDA. These efforts culminated in Moreh’s platform, running on AMD Instinct MI250 accelerators, outperforming the NVIDIA A100.

The MoAI platform, Moreh’s infrastructure software product, has already been integrated into commercial services by KT Cloud. It has powered various AI training and inference applications in production environments, including LLM-related tasks.

Moreh has demonstrated differentiated value to customers that want to build hyperscale AI infrastructure, particularly for cloud services, as well as for in-house LLM and multimodal model development. Customers can develop AI models with multiple billions to multiple trillions of parameters on AMD GPUs at even higher performance. Furthermore, the MoAI platform eliminates the need for complicated manual parallelization and optimization of such large AI models. This is enabled by the platform’s GPU virtualization technology, which can be applied generally to any AI model and application. This positions Moreh as a game-changer in the field, revolutionizing the LLM development process by delivering enhanced capabilities at low cost.

In an exciting development, Moreh has revealed plans to refine the developed Korean LLM and release it as open source. The details of this pioneering endeavor can be found in the official whitepaper released by the company. As Moreh continues to push the boundaries of AI technology, its accomplishments are bound to shape the future landscape of AI research and development.

“As we are entering the hyperscale AI era, computing infrastructure, particularly the capability of infrastructure software, directly affects the performance of AI models. This factor stands as both a pivotal competitive edge and a technological barrier for current AI market leaders. With the MoAI platform, Moreh performs as the true enabler of future AI,” remarked Gangwon Jo, Co-CEO of Moreh.

Moreh website: https://moreh.io