Authors: Jiyoung Park, Hankyu Jang, and Changseok Song

Overview

Reducing inference costs has become a critical priority for AI data centers and service providers as large language models (LLMs) continue to scale in size and complexity. The computational expense of serving these models at scale drives the need for efficient optimization techniques that can deliver substantial cost savings without compromising model quality.

A variety of approaches have emerged to address inference optimization, including disaggregation, KV cache-aware routing, test-time routing, quantization, speculative decoding, and many others. Among these techniques, speculative decoding has gained significant traction with major cloud service providers (CSPs) due to its unique advantages: it guarantees model quality preservation while remaining composable with other optimization methods, and delivers performance improvements in most practical scenarios.

Speculative decoding accelerates inference by using a small and fast model (referred to as the draft model) to generate draft tokens, which are then verified in parallel by the original model (referred to as the target model). This approach is fundamentally more efficient than sequential token generation with the target model alone.
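The draft-then-verify cycle can be sketched with toy models. This is a minimal illustration under stated assumptions, not how SGLang or EAGLE3 implement it: it uses greedy decoding only, the "models" are hypothetical deterministic functions (`target_next`, `draft_next`), and the verification loop merely mimics the result of what a real engine computes in a single batched forward pass.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]  # greedy next-token function (toy stand-in for a model)


def speculative_step(prefix: List[int], draft_next: NextToken,
                     target_next: NextToken, num_draft: int) -> List[int]:
    """Run one draft-then-verify cycle and return the tokens it emits."""
    # 1) The draft model proposes num_draft tokens one after another.
    drafted: List[int] = []
    for _ in range(num_draft):
        drafted.append(draft_next(prefix + drafted))

    # 2) The target model checks each drafted position. A real engine scores
    #    all positions in one forward pass; this loop only reproduces the outcome.
    emitted: List[int] = []
    for tok in drafted:
        expected = target_next(prefix + emitted)
        if tok != expected:
            emitted.append(expected)  # first mismatch: keep the target's token and stop
            return emitted
        emitted.append(tok)
    # Every draft token matched, so the target's pass also yields one extra token.
    emitted.append(target_next(prefix + emitted))
    return emitted


def generate(prefix: List[int], draft_next: NextToken, target_next: NextToken,
             num_draft: int, max_new: int) -> Tuple[List[int], int]:
    """Generate max_new tokens; also return how many target passes were used."""
    out, steps = list(prefix), 0
    while len(out) - len(prefix) < max_new:
        out += speculative_step(out, draft_next, target_next, num_draft)
        steps += 1
    return out[:len(prefix) + max_new], steps


def plain_decode(prefix: List[int], target_next: NextToken, max_new: int) -> List[int]:
    """Standard decoding: one target call per generated token."""
    out = list(prefix)
    for _ in range(max_new):
        out.append(target_next(out))
    return out


# Hypothetical toy models: the draft agrees with the target except at some positions.
def target_next(seq: List[int]) -> int:
    return (sum(seq) + len(seq)) % 5


def draft_next(seq: List[int]) -> int:
    guess = target_next(seq)
    return (guess + 1) % 5 if len(seq) % 3 == 2 else guess
```

Because every emitted token is either confirmed or supplied by the target, `generate([1], draft_next, target_next, 4, 10)` produces the same tokens as `plain_decode([1], target_next, 10)` while using fewer target passes.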

The figure above illustrates the timeline for generating 10 tokens using speculative decoding with 4 draft tokens versus standard decoding. Even when only 2 draft tokens are accepted on average, speculative decoding achieves approximately 2× speedup.

This efficiency gain is possible because the time required for the target model to verify multiple tokens in parallel is nearly identical to the time needed to generate a single token. This occurs because LLM inference is primarily memory-bound rather than compute-bound—the bottleneck lies in loading model weights from memory, not in the actual computation. Whether verifying one token or multiple tokens simultaneously, the target model must load the same weights, resulting in similar latency. By amortizing this memory access cost across multiple token verifications, speculative decoding significantly reduces the total number of expensive target model forward passes required to generate a sequence.
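A back-of-the-envelope cost model makes this amortization concrete. The sketch below rests on assumptions that do not come from the article: each verification pass costs about as much as one ordinary target decoding step, each draft token costs a fixed fraction of that (`draft_cost_ratio`, a hypothetical parameter), and every cycle yields the accepted draft tokens plus one token supplied by the target itself.

```python
def speculative_speedup(accepted_per_step: float, num_draft: int,
                        draft_cost_ratio: float,
                        verify_cost_ratio: float = 1.0) -> float:
    """Estimate speedup over standard decoding under a simple cost model.

    Costs are expressed relative to one ordinary target decoding step.
    """
    # Tokens gained per cycle: accepted drafts plus the target's own token (assumption).
    tokens_per_cycle = accepted_per_step + 1
    # Cost per cycle: num_draft draft steps plus one parallel verification pass.
    cycle_cost = num_draft * draft_cost_ratio + verify_cost_ratio
    return tokens_per_cycle / cycle_cost


# 4 draft tokens, 2 accepted on average, draft step assumed to cost 10% of a target step.
estimate = speculative_speedup(accepted_per_step=2, num_draft=4, draft_cost_ratio=0.1)
```

With these assumed ratios the estimate is 3 / 1.4, roughly 2.1×. The same model also shows the failure mode: if no draft tokens are accepted, the cycle costs more than a plain decoding step and the estimate drops below 1.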

However, conventional speculative decoding approaches face a key limitation: draft models are typically pre-trained on general workloads that may not align with the distribution of real production traffic. Since workload distributions vary significantly across different services and evolve over time even within the same service, a draft model trained on generic data often delivers suboptimal performance for specialized or shifting use cases.

Temporal Incremental Draft Engine (TIDE) for Self-Improving LLM Inference

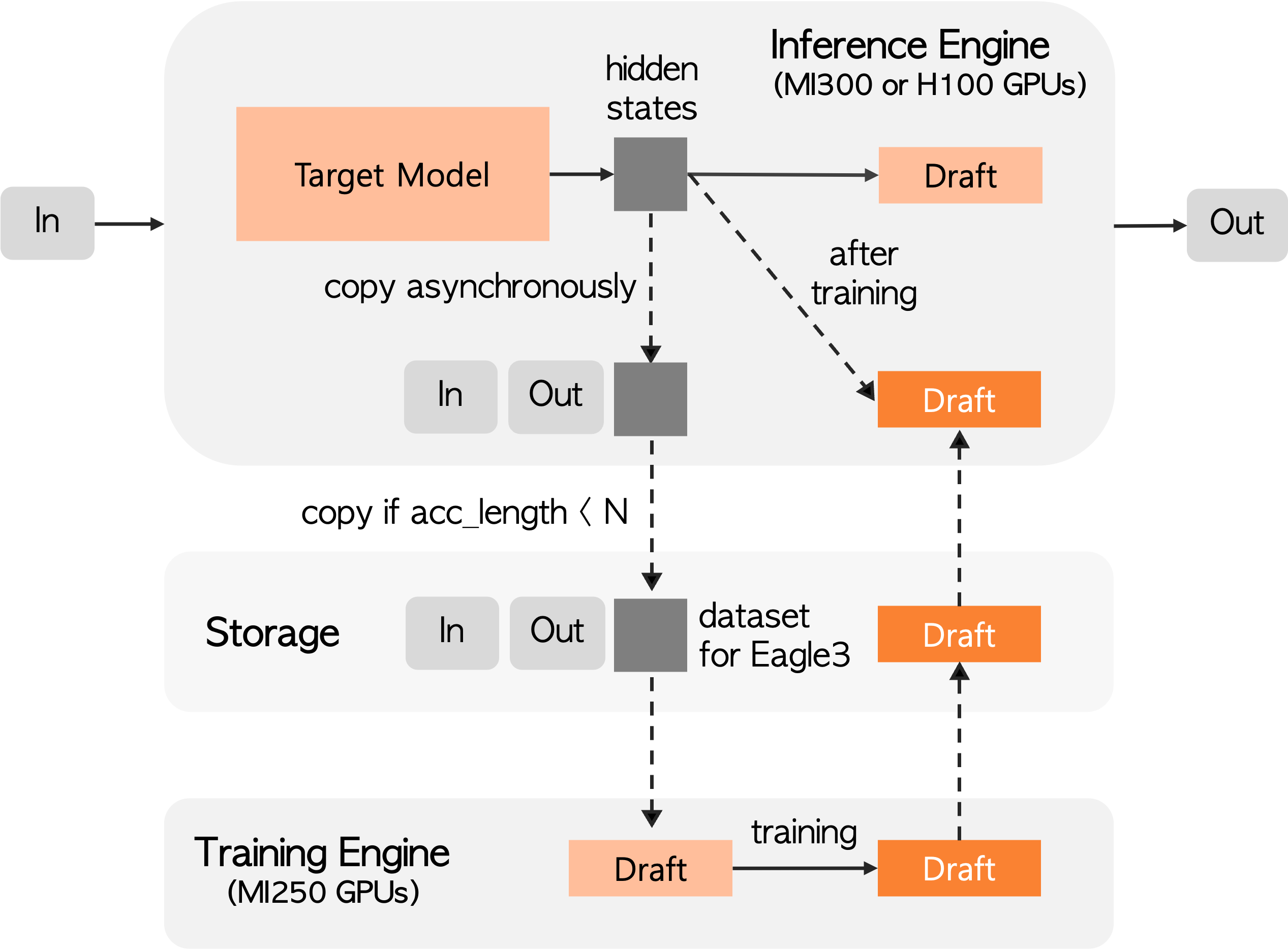

TIDE addresses this challenge through runtime draft model training. TIDE leverages the EAGLE3 speculative decoding technique with SGLang as the inference engine and SpecForge for draft model training. By continuously adapting the draft model based on live service workloads, TIDE automatically improves inference performance without manual intervention.

EAGLE3: Training Draft Models from Hidden States

EAGLE3 takes a unique approach to draft model training. Unlike traditional draft models that function as standalone language models, EAGLE3’s draft model takes the hidden states from several intermediate layers of the target model as input and learns to predict the target model’s output distribution.
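To illustrate what such a training example might look like, the sketch below builds one (input, label) pair for a single token position. Everything here is hypothetical: the layer indices, the tiny vector sizes, the function names, and the choice to combine layers by simple concatenation are assumptions made for illustration, not details taken from EAGLE3 or SpecForge.

```python
from typing import Dict, List, Sequence, Tuple


def fuse_hidden_states(hidden_by_layer: Dict[int, List[float]],
                       layer_ids: Sequence[int]) -> List[float]:
    """Concatenate the hidden states of the selected layers into one feature vector."""
    fused: List[float] = []
    for layer_id in layer_ids:
        fused.extend(hidden_by_layer[layer_id])
    return fused


def make_training_example(hidden_by_layer: Dict[int, List[float]],
                          layer_ids: Sequence[int],
                          target_probs: Sequence[float]
                          ) -> Tuple[List[float], List[float]]:
    """Pair the fused features with the target model's output distribution."""
    return fuse_hidden_states(hidden_by_layer, layer_ids), list(target_probs)


# Hypothetical hidden states for one token position (real vectors are far larger).
hidden = {2: [0.1, 0.2], 18: [0.3, 0.4], 34: [0.5, 0.6]}
features, label = make_training_example(hidden, [2, 18, 34], [0.7, 0.2, 0.1])
```

The draft model would then be trained to map `features` to `label`, which is why hidden states captured during serving can double as training data.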

TIDE System Design

TIDE’s architecture is designed to seamlessly integrate runtime training into a production inference system while minimizing overhead and complexity.

1. Inference Engine with Hidden State Logging

The inference engine (SGLang) performs standard prefill and decoding computations to serve user requests. Critically, during these operations, it captures and dumps the hidden states from the target model’s intermediate layers to storage. These hidden states represent the internal representations computed during actual production inference and serve as training data for the draft model.

2. Training and Model Update

As the inference engine continues serving requests, hidden states accumulate in storage. Once enough data has been collected to represent a meaningful sample of the current workload distribution, the training process is triggered automatically. The training engine then loads the accumulated hidden states and trains the EAGLE3 draft model to better predict the target model’s output distribution based on the recent workload. After training converges, the updated draft model is deployed back to the inference engine, replacing the previous version. This completes one adaptation cycle, and the process repeats as new hidden states accumulate, ensuring continuous adaptation to evolving workload patterns.
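The adaptation cycle can be sketched as a threshold-triggered loop. This is a simplified illustration, not TIDE's actual implementation: `AdaptationLoop`, `min_samples`, `train_fn`, and `deploy_fn` are hypothetical names, the trigger is a plain sample count, and training runs inline here for brevity, whereas the article describes it as running independently of inference.

```python
from typing import Any, Callable, List


class AdaptationLoop:
    """Collect samples and run a train-then-deploy cycle once enough have accumulated."""

    def __init__(self, min_samples: int,
                 train_fn: Callable[[List[Any]], Any],
                 deploy_fn: Callable[[Any], None]) -> None:
        self.min_samples = min_samples  # hypothetical trigger threshold
        self.train_fn = train_fn        # trains a draft model from a batch of samples
        self.deploy_fn = deploy_fn      # swaps the new draft model into serving
        self.cycles = 0
        self._buffer: List[Any] = []

    @property
    def pending(self) -> int:
        """Number of samples waiting for the next training run."""
        return len(self._buffer)

    def record(self, sample: Any) -> bool:
        """Store one sample; return True if this call completed an adaptation cycle."""
        self._buffer.append(sample)
        if len(self._buffer) < self.min_samples:
            return False
        # Hand the accumulated batch to training and start a fresh buffer.
        batch, self._buffer = self._buffer, []
        self.deploy_fn(self.train_fn(batch))
        self.cycles += 1
        return True
```

For example, with `min_samples=3` and seven recorded samples, two cycles complete and one sample remains pending for the next run.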

This architecture elegantly addresses the key challenges of runtime training:

- Near-zero inference overhead: Hidden state logging runs asynchronously with inference computations, allowing the overhead to be almost completely hidden and adding virtually no latency to request serving.

- Asynchronous training: Training happens independently of inference, so it doesn’t block or slow down request serving.

- Automatic adaptation: The entire cycle runs autonomously without manual intervention, continuously adapting to workload changes.

- Resource efficiency: In heterogeneous GPU systems, training can be offloaded to different hardware while high-performance GPUs focus on inference.

The architecture’s simplicity and automation make it practical for production deployment, where manual tuning and intervention are costly and impractical.
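One common way to keep logging off the serving path is a bounded queue drained by a background thread. The sketch below shows that general pattern using only the Python standard library; it is an assumption about how such logging could be structured, not a description of SGLang's mechanism. The class name, the `sink` callback, and the policy of dropping records when the queue is full are all hypothetical choices.

```python
import queue
import threading
from typing import Any, Callable


class AsyncHiddenStateLogger:
    """Hand records to a background writer so the caller never waits on storage."""

    def __init__(self, sink: Callable[[Any], None], max_pending: int = 1024) -> None:
        self._sink = sink  # e.g. a function that writes one record to storage
        self._queue: "queue.Queue[Any]" = queue.Queue(maxsize=max_pending)
        self.dropped = 0   # records discarded because the queue was full
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def log(self, record: Any) -> None:
        """Enqueue without blocking; drop the record if the writer has fallen behind."""
        try:
            self._queue.put_nowait(record)
        except queue.Full:
            self.dropped += 1

    def _drain(self) -> None:
        """Background loop: write records in arrival order until the stop marker."""
        while True:
            record = self._queue.get()
            if record is None:  # stop marker enqueued by close()
                return
            self._sink(record)

    def close(self) -> None:
        """Flush everything already queued, then stop the worker."""
        self._queue.put(None)
        self._worker.join()
```

Dropping on overflow trades completeness of the training data for a guarantee that request serving is never stalled by slow storage; a deployment that needs every sample would pick a different policy.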

Evaluation

To evaluate the effectiveness of runtime draft model training, we compared the performance of TIDE against a static draft model pre-trained on general data. We used the dbdu/ShareGPT-74k-ko dataset for evaluation, which represents Korean conversational queries and provides a realistic testbed for workload-specific adaptation. Our experiments used lmsys/gpt-oss-120b-bf16 as the target model with lmsys/EAGLE3-gpt-oss-120b-bf16 as the baseline pretrained draft model, with inference running on AMD MI300X or NVIDIA H100 GPUs and draft model training conducted on AMD MI250 GPUs, demonstrating TIDE’s ability to leverage heterogeneous GPU resources effectively.

As shown in Figure 3, TIDE achieves output token throughput speedups of 1.14× to 1.35× over the pretrained draft model, depending on the concurrency level.

Figure 4 illustrates a critical advantage of runtime training: throughput improves as TIDE continues to learn from the workload. The pretrained draft model maintains relatively constant throughput throughout the experiment, as its performance is fixed by its pre-training. In contrast, TIDE shows progressive improvement in throughput as it adapts to the specific patterns in the Korean conversational dataset.

To better understand the mechanism behind TIDE’s performance improvements, we analyzed how acceptance length—the number of draft tokens accepted by the target model in each verification step—evolves over time at different concurrency levels.
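Following the definition above, acceptance length can be computed from a log of per-step accepted counts. The helpers below are an illustrative sketch with hypothetical names and made-up sample values. Some tools also count the extra token produced by the target in each step, which would shift every value by one.

```python
from typing import List, Sequence


def mean_acceptance_length(accepted_counts: Sequence[int]) -> float:
    """Average number of draft tokens accepted per verification step."""
    if not accepted_counts:
        return 0.0
    return sum(accepted_counts) / len(accepted_counts)


def windowed_means(accepted_counts: Sequence[int], window: int) -> List[float]:
    """Mean acceptance length over consecutive, non-overlapping windows of steps."""
    return [
        mean_acceptance_length(accepted_counts[start:start + window])
        for start in range(0, len(accepted_counts), window)
    ]


# Made-up log: acceptance rises after a (hypothetical) draft model update.
trend = windowed_means([1, 1, 3, 3], window=2)
```

Plotting the windowed means over time gives the kind of curve used to track how the draft model adapts.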

Figure 5 reveals an important characteristic of runtime training: higher concurrency levels lead to faster improvement in acceptance length. This occurs because:

- Faster data accumulation: At higher concurrency, more requests are processed simultaneously, allowing TIDE to collect training data at a faster rate. This accelerates the learning process and enables quicker adaptation to the workload distribution.

- More frequent model updates: With more training samples available per unit time, the draft model can be updated more frequently with statistically significant batches, leading to faster convergence and broader pattern coverage.

Conclusion

TIDE demonstrates that runtime draft model training can significantly improve speculative decoding performance in production environments. Our experiments on the Korean conversational dataset show 1.14× to 1.35× output token throughput speedup over static pre-trained draft models, with performance continuously improving as the system adapts to live workloads.

Beyond performance gains, TIDE offers compelling advantages in heterogeneous GPU systems. By leveraging idle or training-optimized resources — such as older GPU generations or underutilized hardware — for draft model training while reserving high-performance GPUs for inference, TIDE improves both resource utilization and inference efficiency simultaneously. This results in better overall cost-performance at the system level.

To seamlessly integrate runtime training into production inference systems, we have contributed to open-source projects, making TIDE’s capabilities accessible to the broader community.

As AI workloads continue to diversify and evolve, systems like TIDE that automatically adapt to changing patterns will become increasingly essential for maintaining efficient and cost-effective inference at scale.