Authors: Jiyoung Park, Seungman Han, Jangwoong Kim, Jungwook Kim, and Kyungrok Kim

Overview

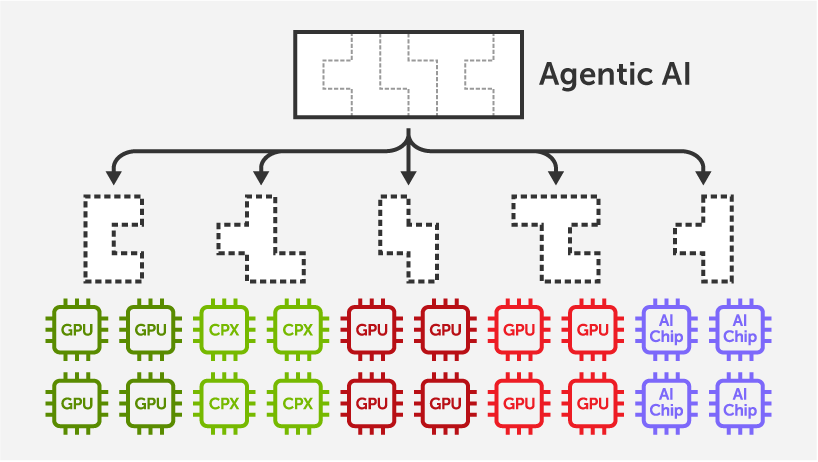

As agentic workflows become increasingly prevalent in AI applications, models must handle dramatically longer context lengths. This shift presents a critical challenge: maintaining service-level objectives (SLOs) while maximizing system utilization. In particular, optimizing prefill performance is crucial for reducing Time-To-First-Token (TTFT) for long-context requests. To address this, we developed the SLO-Driven Prefill Engine (SLOPE).

SLOPE is a dedicated prefill engine that applies context parallelism techniques (Ulysses + Ring Attention) across multi-node GPU clusters for SLO-driven optimization of long-context inputs. Existing LLM engines are fundamentally limited in reducing TTFT below a certain threshold when handling long input prompts, as prefill computation is confined to a single node. SLOPE overcomes this limitation by parallelizing prefill computation across multiple nodes, driving TTFT well below the target SLO threshold while maximizing the number of concurrent user requests.

SLOPE can be integrated with existing LLM engines in a prefill/decode disaggregation mode, where it operates as a dedicated prefill worker.

Introducing Context Parallelism

Tensor parallelism (TP) is the most widely used parallelization technique in LLM inference. TP distributes linear layer parameters across devices, which splits attention heads across devices and requires AllReduce communication. However, simply increasing TP size to reduce TTFT has limitations:

- Inefficient GEMM operations: Excessive parameter partitioning shrinks the matrices each device multiplies, so the resulting GEMMs underutilize the hardware.

- Redundant computation: When the number of attention heads is smaller than the TP size, heads must be replicated across devices, so redundant computation occurs.

- Communication overhead: As TP size increases, inter-node AllReduce becomes necessary, significantly increasing communication overhead.

Context parallelism partitions the input prompt along the sequence dimension. Particularly for long context scenarios, context parallelism is more efficient than tensor parallelism due to the massive parallelism available in the sequence dimension. Most operations, except for the attention layer, have no inter-token dependencies and can be processed completely independently. The inter-token dependencies in the attention layer can be addressed through two approaches:

- Ulysses: performs all-to-all communication on query, key, and value before attention computation, partitioning them along the head dimension to enable independent computation.

- Ring Attention: performs attention computation with partial query, key, and value on each device while simultaneously sending/receiving key and value to/from neighboring devices.
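The two approaches can be illustrated with a small single-process NumPy simulation. This is a minimal sketch, not SLOPE's implementation: "devices" are just list entries, the function names (`attention`, `all_to_all`, `ulysses_attention`, `ring_attention`) are hypothetical, attention is non-causal for brevity, and the ring variant uses the standard running-softmax accumulation so that partial results from each key/value block can be merged.

```python
import numpy as np

def attention(q, k, v):
    """Reference softmax attention on (heads, seq, dim) arrays (non-causal)."""
    s = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    w = np.exp(s - s.max(axis=-1, keepdims=True))
    return (w / w.sum(axis=-1, keepdims=True)) @ v

def all_to_all(shards, split_axis, concat_axis):
    """Simulated all-to-all: device r receives piece r of every device's shard."""
    n = len(shards)
    pieces = [np.array_split(s, n, axis=split_axis) for s in shards]
    return [np.concatenate([pieces[src][r] for src in range(n)], axis=concat_axis)
            for r in range(n)]

def ulysses_attention(q_sh, k_sh, v_sh):
    # Sequence-sharded (heads, seq/P, dim) -> head-sharded (heads/P, seq, dim).
    q, k, v = (all_to_all(x, split_axis=0, concat_axis=1) for x in (q_sh, k_sh, v_sh))
    # Each device now sees the full sequence for its own heads.
    out = [attention(qi, ki, vi) for qi, ki, vi in zip(q, k, v)]
    # Reverse exchange: back to sequence sharding with all heads per device.
    return all_to_all(out, split_axis=1, concat_axis=0)

def ring_attention(q_sh, k_sh, v_sh):
    n = len(q_sh)
    outputs = []
    for r in range(n):  # each iteration plays the role of one device
        q = q_sh[r]
        scale = 1.0 / np.sqrt(q.shape[-1])
        m = np.full(q.shape[:2] + (1,), -np.inf)  # running row-wise max
        l = np.zeros(q.shape[:2] + (1,))          # running softmax denominator
        acc = np.zeros_like(q)                    # running unnormalized output
        for step in range(n):
            src = (r - step) % n  # K/V block that reaches device r after `step` hops
            s = (q @ k_sh[src].transpose(0, 2, 1)) * scale
            m_new = np.maximum(m, s.max(axis=-1, keepdims=True))
            corr = np.exp(m - m_new)              # rescale earlier partial results
            p = np.exp(s - m_new)
            l = l * corr + p.sum(axis=-1, keepdims=True)
            acc = acc * corr + p @ v_sh[src]
            m = m_new
        outputs.append(acc / l)
    return outputs

# Illustrative sizes only: 4 simulated devices, 8 heads, 32 tokens, head dim 16.
rng = np.random.default_rng(0)
P, H, S, D = 4, 8, 32, 16
q, k, v = (rng.standard_normal((H, S, D)) for _ in range(3))

def shard(x):
    return np.array_split(x, P, axis=1)  # partition along the sequence dimension

ref = attention(q, k, v)
uly = np.concatenate(ulysses_attention(shard(q), shard(k), shard(v)), axis=1)
ring = np.concatenate(ring_attention(shard(q), shard(k), shard(v)), axis=1)
print("max abs error:", np.abs(uly - ref).max(), np.abs(ring - ref).max())
```

Both variants reproduce the unsharded result up to floating-point error. In a real system the inner loop of `ring_attention` would overlap the block computation with sending and receiving the next key/value block, which this sequential simulation does not model.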

These two approaches have complementary trade-offs. The degree of Ulysses parallelism is limited with Grouped Query Attention (GQA) or Multi-Head Latent Attention (MLA), because the reduced number of key and value heads leaves fewer heads to partition. Ring Attention, on the other hand, introduces higher communication volume than Ulysses. However, as sequence length grows, attention computation grows quadratically while the key and value traffic grows only linearly, so the communication can be hidden behind the computation. As a result, the two approaches should be combined according to context length and SLO requirements.
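One simple way to picture such a combination is to give Ulysses the largest degree that the key/value heads allow and let Ring Attention cover the remaining devices. The sketch below encodes only that head-count constraint. The helper `split_cp_degrees` is hypothetical, it is not the policy SLOPE uses, and it deliberately ignores context length and SLO targets, which a real planner would also weigh.

```python
from math import gcd

def split_cp_degrees(num_devices: int, num_kv_heads: int) -> tuple[int, int]:
    """Return (ulysses_degree, ring_degree) with ulysses * ring == num_devices.

    Assumption: the Ulysses degree must divide both the device count and the
    number of key/value heads (no head replication), so the largest valid
    choice is their greatest common divisor.
    """
    if num_devices < 1 or num_kv_heads < 1:
        raise ValueError("num_devices and num_kv_heads must be positive")
    ulysses = gcd(num_devices, num_kv_heads)
    return ulysses, num_devices // ulysses

# Illustrative (device count, KV head count) pairs, not measured configurations.
for devices, kv_heads in [(8, 8), (32, 8), (8, 1)]:
    u, r = split_cp_degrees(devices, kv_heads)
    print(f"{devices} devices, {kv_heads} KV heads -> Ulysses={u}, Ring={r}")
```

With a single key/value head the helper falls back entirely to Ring Attention, which matches the observation that few key/value heads leave little room for Ulysses.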

When context parallelism is applied, all devices hold duplicate model parameters, so memory usage increases compared to TP. We address this by applying pipeline parallelism alongside context parallelism.
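The effect on weight memory can be estimated with simple arithmetic. The following is a rough sketch under stated assumptions: `ParallelLayout` is a hypothetical helper, layers are assumed to split evenly across pipeline stages, activations and KV cache are ignored, and the 64 GiB weight size is a placeholder rather than a measured figure for any model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ParallelLayout:
    ulysses: int   # Ulysses (head-dimension) degree
    ring: int      # Ring Attention degree
    pipeline: int  # pipeline-parallel degree

    @property
    def context_parallel(self) -> int:
        return self.ulysses * self.ring

    @property
    def world_size(self) -> int:
        return self.context_parallel * self.pipeline

    def weight_gib_per_device(self, total_weight_gib: float) -> float:
        # Context parallelism replicates weights; only pipeline stages divide them.
        return total_weight_gib / self.pipeline

TOTAL_WEIGHT_GIB = 64.0  # placeholder value for illustration

# Three illustrative ways to lay out 32 devices.
for layout in (ParallelLayout(8, 4, 1), ParallelLayout(8, 2, 2), ParallelLayout(4, 2, 4)):
    per_device = layout.weight_gib_per_device(TOTAL_WEIGHT_GIB)
    print(f"{layout} -> {layout.world_size} devices, {per_device:.1f} GiB weights per device")
```

Raising the pipeline degree lowers the per-device weight footprint at the cost of a smaller context-parallel group, so the two degrees trade off against each other for a fixed device count.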

Evaluation

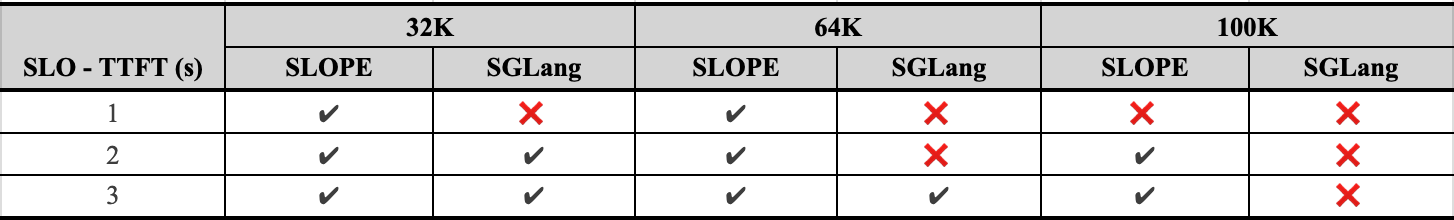

We evaluate SLOPE’s prefill performance across parallelization configurations that combine Ulysses, Ring Attention, and pipeline parallelism, and compare it against SGLang with TP=8 on a 4-node AMD MI250 cluster (8 devices per node). All experiments use the openai/gpt-oss-120b model and test three context lengths: 32K, 64K, and 100K.

As shown in the table below, SLOPE satisfies very low TTFT SLOs across all context lengths, while SGLang’s ability to meet these requirements varies.

Figure 1 shows TTFT and throughput measurements across various parallelization configurations and concurrency levels. The results confirm that SLOPE meets strict latency SLOs, with TTFT under 1 second for both 32K and 64K contexts. Compared to SGLang with TP=8, SLOPE achieves higher throughput at the same TTFT, meaning it can handle more concurrent user requests while meeting SLO requirements. Even at 100K context length, SLOPE achieves TTFT under 2 seconds, while SGLang with TP=8 is limited to approximately 9 seconds. This shows that SLOPE provides a practical solution for long-context LLM inference.

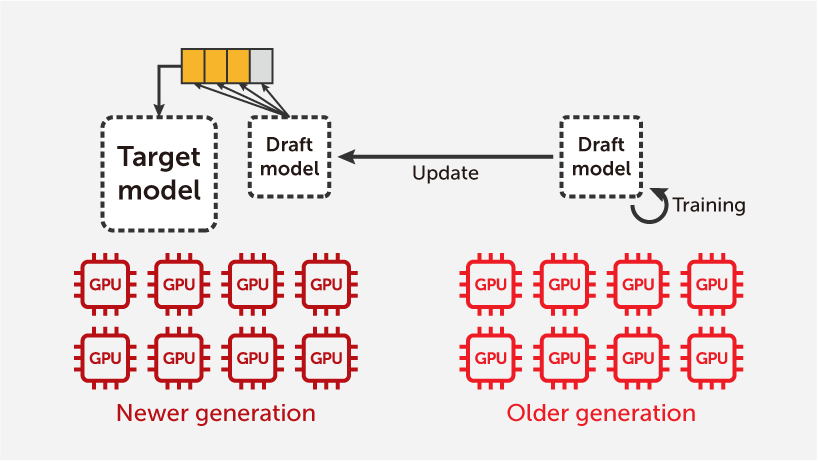

SLOPE Engine in Heterogeneous Clusters

The use of the SLOPE engine goes beyond merely reducing response latency and increasing token throughput for long-context inference. It also provides a way to efficiently utilize legacy GPU servers that already exist in data centers.

In general, LLM inference is difficult to parallelize across multiple nodes, which is one of the reasons why increasingly faster, higher-capacity, and more expensive GPUs continue to be introduced to handle large-scale LLMs. However, with the SLOPE engine, at least in the prefill stage, multiple older GPU servers can be aggregated to achieve sufficiently low latency and high throughput.

To demonstrate that this type of utilization is feasible, we evaluated SLOPE on an AMD MI250 GPU cluster. In a system where AMD MI250 GPUs (comparable to NVIDIA A100) and newer MI300-series GPUs coexist, the MI250 GPUs can be allocated to SLOPE, while the MI300-series GPUs perform decoding using a conventional inference engine, enabling higher overall system efficiency. These capabilities will be supported through the MoAI Inference Framework starting in Q1 2026.