As artificial intelligence evolves rapidly, robust language-specific models matter more than ever. Moreh is excited to announce the release of Motif, a high-performance Korean large language model (LLM), as an open-source model. This release underscores our commitment to helping the Korean AI ecosystem grow.

Key Points

- Moreh is releasing Motif, a high-performance Korean LLM, as open source. Although developing Motif required significant resources, we are making it publicly available in the hope of stimulating further growth in the AI industry.

- The newly released Motif surpasses the Korean language performance of existing top LLMs, with exceptional results on the KMMLU benchmark.

- Motif is already live as a chatbot service, and anyone can freely access it through the models on Hugging Face and the scripts on GitHub.

The Journey to Motif

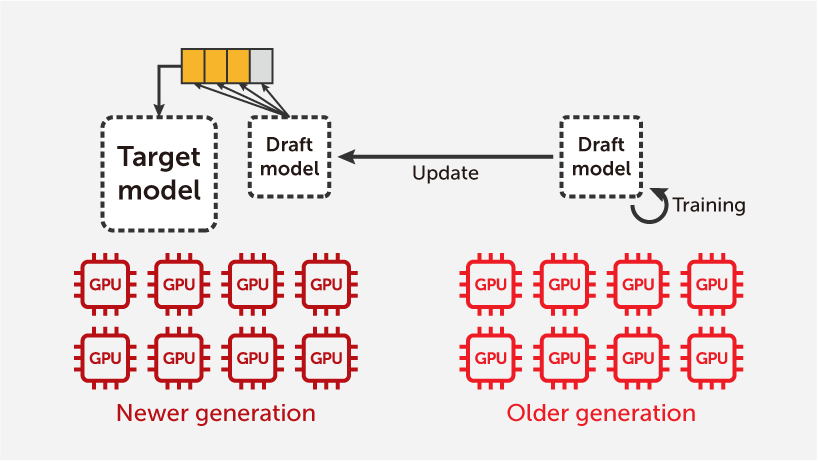

Developing a large language model like Motif requires extensive resources and cutting-edge technology. Our focus has been on strengthening the model’s Korean language capabilities so that Motif excels in Korean while maintaining strong performance in English. Built on the Llama 3 70B pretrained model, Motif uses LLaMA Pro to deepen the model (adding layers) and Masked Structure Growth (MSG) to widen it. This approach lets us scale the model effectively while preserving the integrity of its pretrained weights.
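To make the idea of growing a model without disturbing its pretrained weights more concrete, here is a minimal sketch of function-preserving depth expansion in the spirit of LLaMA Pro's block expansion. It is not Moreh's training code: the toy `ResidualBlock`, the `expand_depth` helper, and the tiny dimensions are all hypothetical and chosen only for illustration. The key assumption is that each block has a residual connection, so a copied block whose output projection is zeroed acts as an identity until it is trained. Width expansion with MSG is not covered here.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, x) for x in vector]


class ResidualBlock:
    """Toy stand-in for a transformer block: y = x + W_out @ relu(W_in @ x)."""

    def __init__(self, w_in, w_out):
        self.w_in = w_in
        self.w_out = w_out

    def __call__(self, x):
        update = matvec(self.w_out, relu(matvec(self.w_in, x)))
        return [xi + ui for xi, ui in zip(x, update)]


def expand_depth(blocks, every):
    """After every `every`-th block, insert a copy whose output projection is zero.

    The zeroed projection makes the new block an identity map at initialization,
    so the expanded stack computes exactly what the original stack computed.
    """
    expanded = []
    for index, block in enumerate(blocks, start=1):
        expanded.append(block)
        if index % every == 0:
            copied_in = [row[:] for row in block.w_in]
            zero_out = [[0.0] * len(row) for row in block.w_out]
            expanded.append(ResidualBlock(copied_in, zero_out))
    return expanded


def forward(blocks, x):
    for block in blocks:
        x = block(x)
    return x


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Hypothetical toy sizes; a real model has thousands of dimensions per layer.
rng = random.Random(0)
dim, hidden = 4, 8
base = [
    ResidualBlock(random_matrix(hidden, dim, rng), random_matrix(dim, hidden, rng))
    for _ in range(4)
]
grown = expand_depth(base, every=2)
sample = [0.5, -1.0, 2.0, 0.0]
```

In this sketch, `forward(grown, sample)` returns the same vector as `forward(base, sample)` even though `grown` has six blocks instead of four. Further training can then update the inserted blocks, which is one way a larger model can start from the behavior of its pretrained base.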

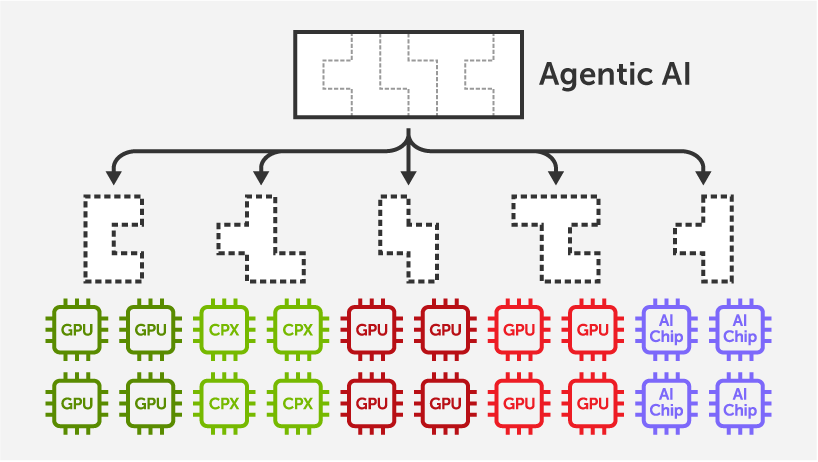

An essential part of Motif’s development is the MoAI Platform, a state-of-the-art AI infrastructure optimized for training large-scale models. The platform efficiently manages thousands of GPUs in a cluster and provides automatic parallelization, GPU virtualization, and dynamic GPU allocation. These capabilities make it much easier to experiment with, optimize, and fine-tune Motif, so we can focus on architectural improvements and performance enhancements.

A Commitment to the AI Ecosystem

Releasing Motif as an open-source model is a deliberate step by Moreh to contribute meaningfully to the Korean AI ecosystem. We understand that the landscape of AI innovation thrives on collaboration and shared resources. By making Motif publicly accessible, we are empowering developers, researchers, and businesses throughout Korea to leverage this advanced tool for their applications. This open-source initiative not only supports individual experimentation but also invites collective efforts towards creating specialized solutions that serve our local market’s needs. We envision that many Korean companies will collaborate to maximize the potential of Motif, thereby shaping the future of AI. Our hope is to stimulate growth, drive innovation, and encourage a cooperative spirit within the AI community.

Unprecedented Performance

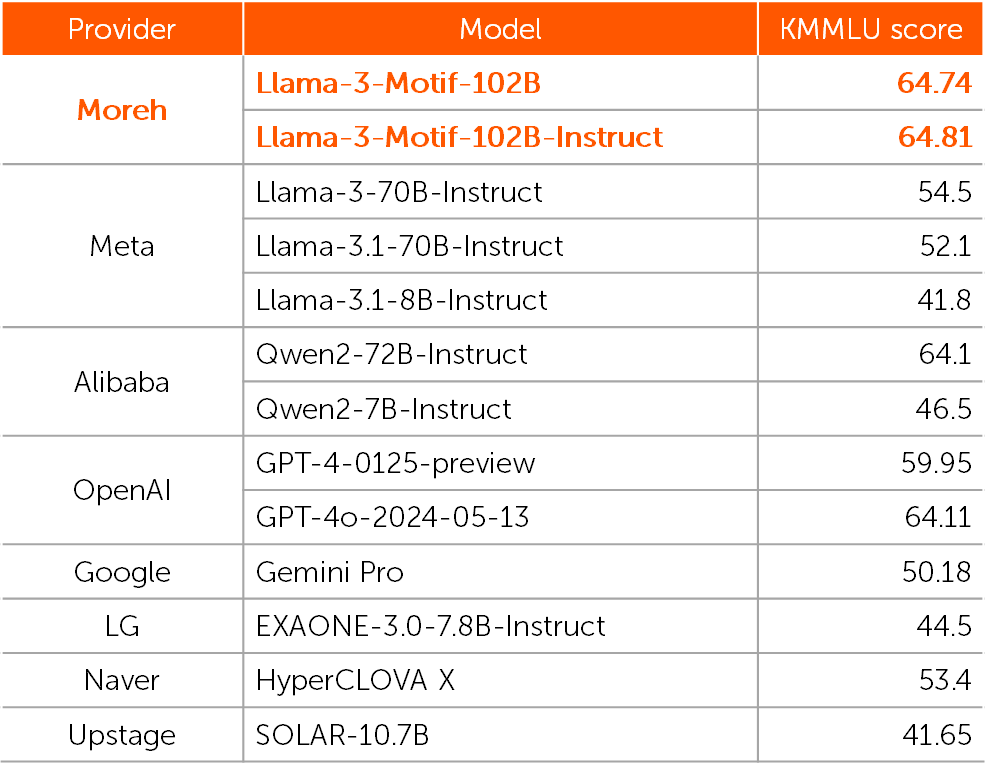

We are proud to announce that the newly released Motif surpasses the Korean language performance of existing top LLMs. With 102 billion parameters, Motif has achieved exceptional results on the KMMLU benchmark, which evaluates AI performance specific to the Korean language. Motif scored 64.74, exceeding the score of OpenAI’s GPT-4, a model widely recognized for its capabilities. On this benchmark Motif also outperforms LLMs from Meta and Google, which confirms its strength in understanding and generating Korean.

KMMLU (Korean Massive Multitask Language Understanding) is a comprehensive benchmark for assessing the knowledge of language models, specifically those trained on Korean data. It includes 35,030 questions across 45 subjects, sourced from various Korean standardized tests such as the Public Service Aptitude Test (PSAT), professional certification exams, and the College Scholastic Ability Test (CSAT). The questions cover a wide range of difficulty, from high school to expert level, offering a detailed evaluation of model performance. Our evaluation was conducted using a 5-shot approach, with the results shown in the table below.
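For readers unfamiliar with 5-shot evaluation, the sketch below shows the general idea: five solved example questions are placed in the prompt before the unanswered target question, and the score is the share of target questions answered correctly. This is an illustration only, not the evaluation script used for Motif. The `Item` class, the prompt layout, and the `Answer:` convention are assumptions, and reading the reported score as percent accuracy is also an assumption.

```python
from dataclasses import dataclass

LETTERS = "ABCD"  # assumes four-option multiple-choice questions


@dataclass
class Item:
    """Hypothetical container for one multiple-choice question."""
    question: str
    choices: list
    answer: str  # one of "A", "B", "C", "D"


def format_item(item, with_answer):
    """Render a question; solved examples include the answer letter."""
    lines = [item.question]
    lines += [f"{letter}. {choice}" for letter, choice in zip(LETTERS, item.choices)]
    lines.append(f"Answer: {item.answer}" if with_answer else "Answer:")
    return "\n".join(lines)


def build_few_shot_prompt(shots, target, k=5):
    """Prepend k solved examples to the unanswered target question."""
    if len(shots) < k:
        raise ValueError(f"need at least {k} solved examples, got {len(shots)}")
    parts = [format_item(shot, with_answer=True) for shot in shots[:k]]
    parts.append(format_item(target, with_answer=False))
    return "\n\n".join(parts)


def accuracy(predictions, items):
    """Percentage of items whose predicted letter matches the answer key."""
    correct = sum(pred == item.answer for pred, item in zip(predictions, items))
    return 100.0 * correct / len(items)


# Placeholder data; real KMMLU questions are written in Korean.
shots = [
    Item(f"Example question {i}?", ["w", "x", "y", "z"], LETTERS[i % 4])
    for i in range(5)
]
target = Item("Target question?", ["w", "x", "y", "z"], "C")
prompt = build_few_shot_prompt(shots, target)
```

The model's continuation after the final `Answer:` is compared with the answer key, and `accuracy` aggregates those comparisons over all evaluated questions.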

Conclusion

As we launch Motif, we are filled with excitement about the future that lies ahead. This initiative not only demonstrates Moreh’s technological achievements but also highlights our dedication to fostering an open and supportive AI ecosystem. It also represents a foundational step towards strengthening Korea’s sovereign AI capabilities. Together, we can harness the capabilities of Motif to drive innovation and propel growth in our industries. Join us in exploring the vast potential of this high-performance LLM, and let’s collectively shape the future of AI for Korea!

- Models on Hugging Face

- Scripts on GitHub

- Live chatbot service @ AI model hub

- Contact Moreh (contact@moreh.io) for help obtaining or building fine-tuning and serving infrastructure for Motif.