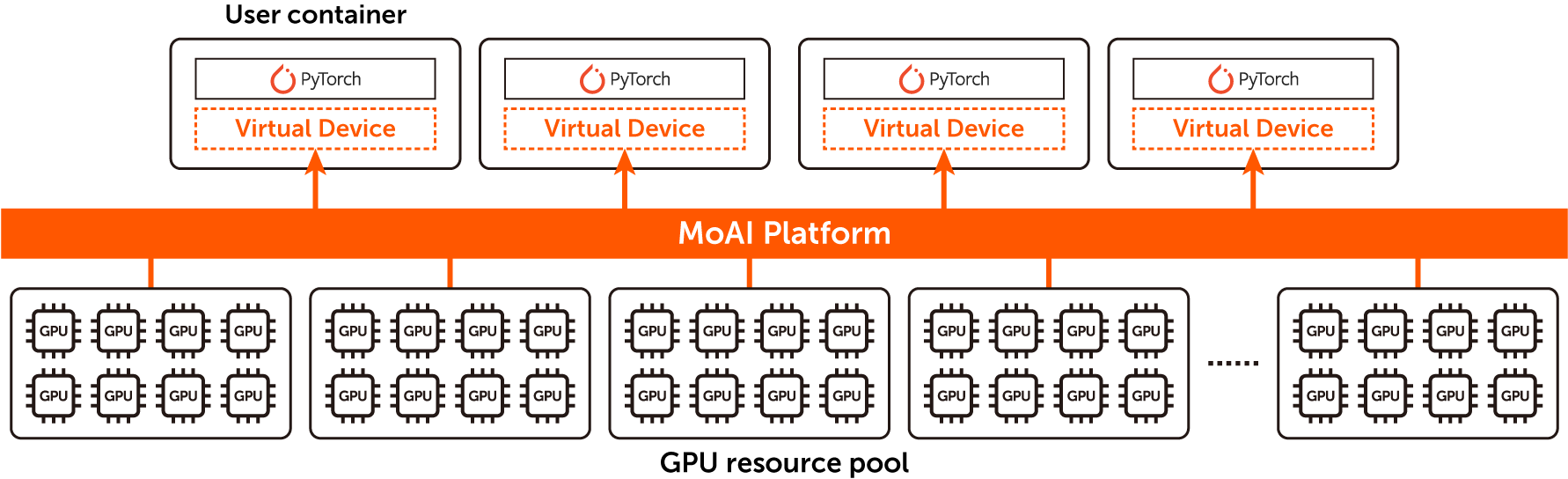

MoAI is a software platform for large-scale AI infrastructure that also supports non-NVIDIA chips, in particular AMD GPUs. It serves as an alternative to CUDA while remaining compatible with PyTorch, and it provides an easy-to-use environment for working with LLMs on up to thousands of GPUs. The core technology of the MoAI platform is GPU virtualization. Each user is given a virtual device called a MoAI accelerator, on which they can run any PyTorch program as if it were a physical NVIDIA GPU (i.e., the cuda:0 device).

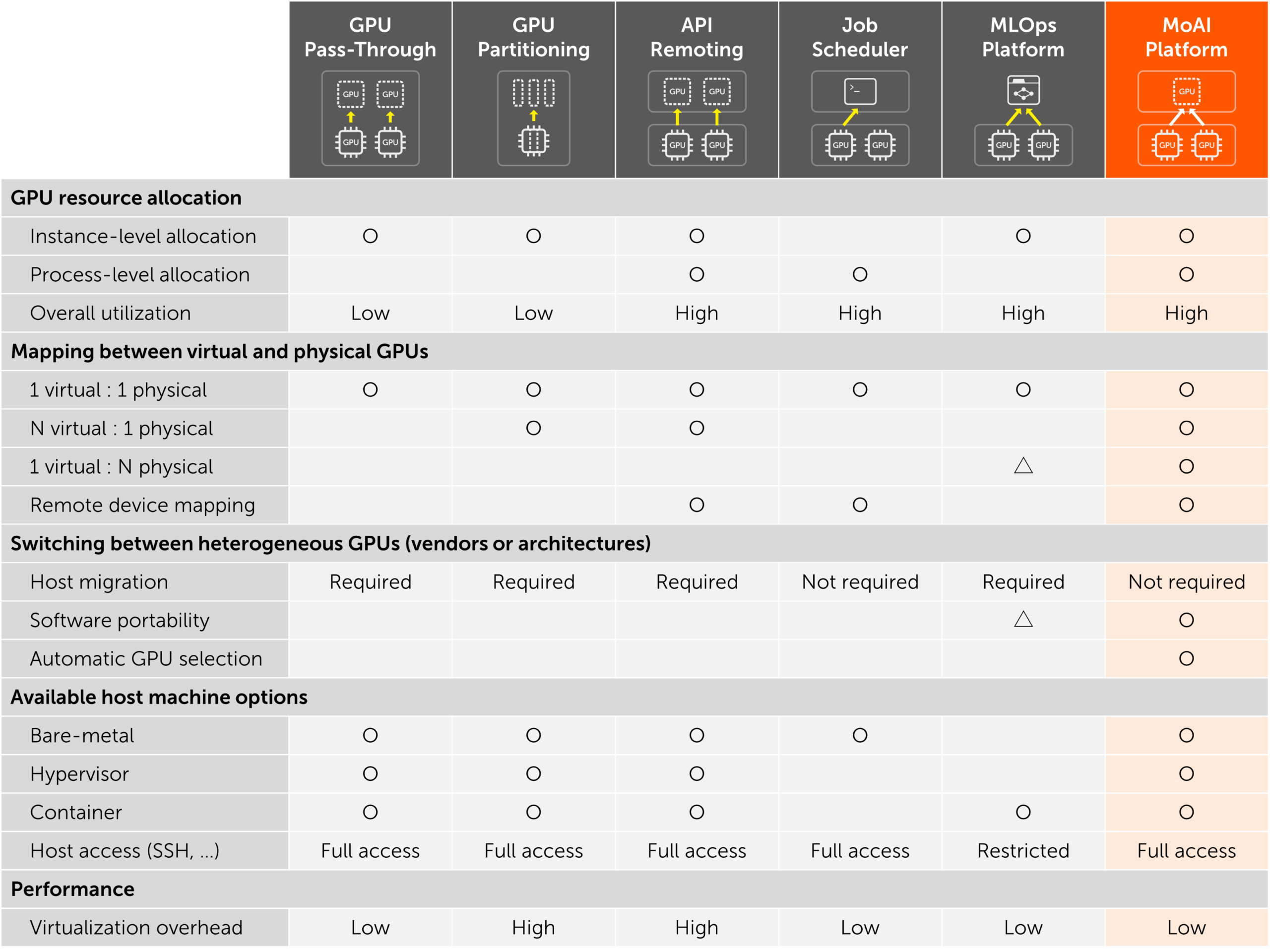

While various solutions offer different types of GPU virtualization and/or resource allocation, MoAI provides a comprehensive and distinctive set of features built on native virtual device support at the PyTorch level.

Fine-grained and Efficient Resource Allocation

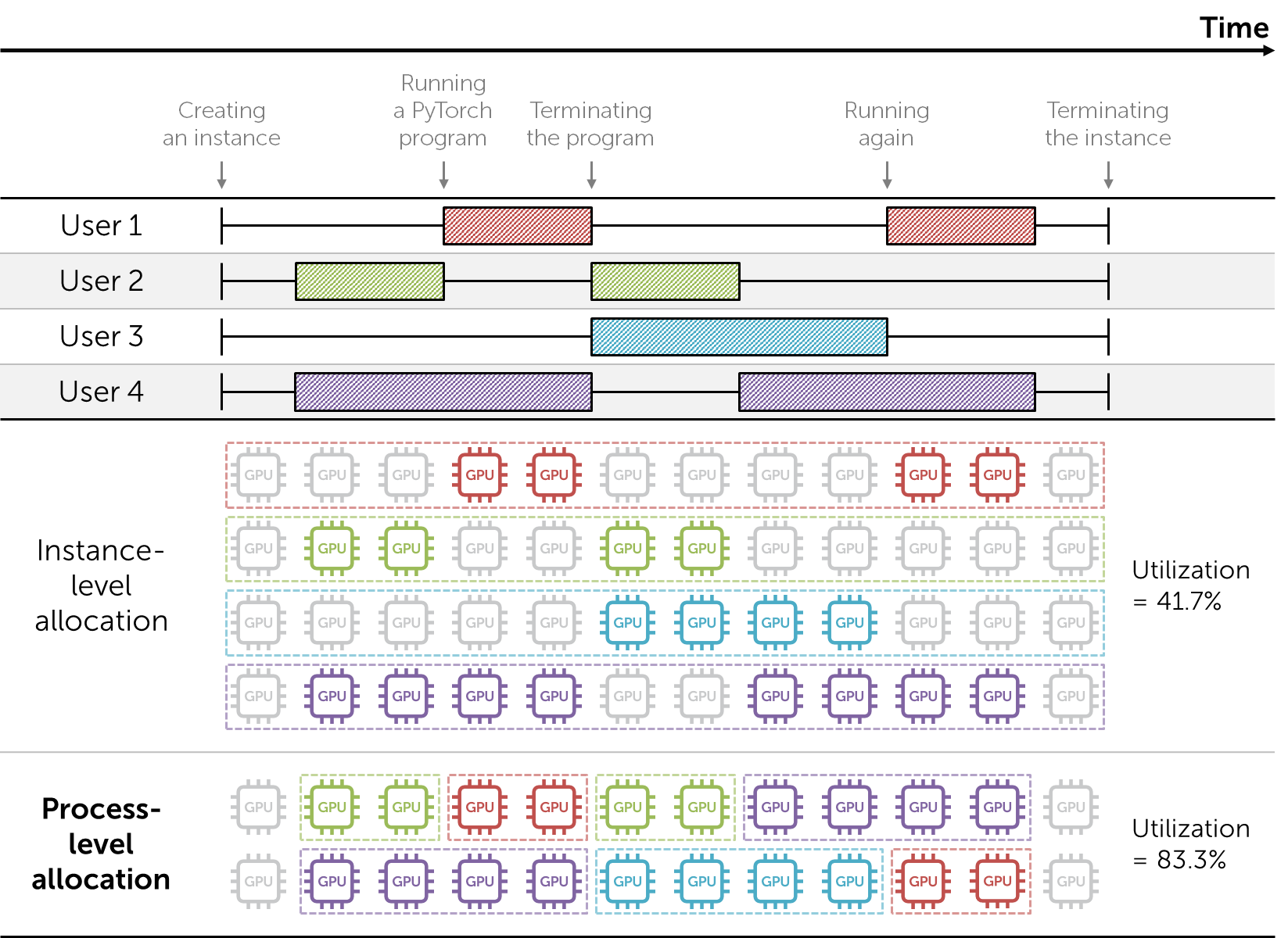

A common method for sharing GPU infrastructure among multiple users is to allocate one or more GPUs exclusively to each user’s instance (virtual machine or container), and to retain this allocation until the instance is terminated. In other words, users are assigned dedicated GPU resources while they have access to the system. In many cases, however, the actual time spent executing computational tasks on the GPU is only a fraction of the time the instance is alive.
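A quick calculation shows how much allocated capacity can sit idle under this model. The sketch assumes one GPU per instance, and the lifetimes and busy hours are made-up numbers chosen only to illustrate the arithmetic, not measurements from any real deployment.

```python
# Hypothetical instances, one GPU each:
# (hours the instance was alive, hours its GPU was actually computing)
instances = [(10.0, 2.0), (24.0, 6.0), (8.0, 4.0)]


def exclusive_utilization(records):
    """Fraction of allocated GPU-hours spent computing when each instance
    holds its GPU for its entire lifetime."""
    allocated = sum(alive for alive, _ in records)
    busy = sum(b for _, b in records)
    return busy / allocated


print(f"{exclusive_utilization(instances):.0%}")  # 12 busy of 42 allocated hours
```

Even though every instance "has" a GPU the whole time, most of those GPU-hours do no work.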

On the MoAI platform, all GPU resources are managed exclusively in MoAI's resource pool and are not directly visible to users. When a user instance is first created, no physical GPUs are assigned; the user sees only a virtual device. When the user actually runs a PyTorch program, idle GPUs from the resource pool are allocated automatically, and once the program ends, those GPUs are released immediately and can be reused by other users or tasks. This can substantially improve the overall utilization of the GPU infrastructure.
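The allocate-on-run, release-on-exit behavior can be pictured with a toy pool. `GpuPool` and `allocate` are hypothetical names used only for illustration; they are not MoAI's API, and on the real platform this happens transparently when a PyTorch program starts and ends rather than through calls the user writes.

```python
from contextlib import contextmanager


class GpuPool:
    """Toy model of a shared GPU pool (hypothetical; not MoAI's API).

    GPUs are handed out only for the duration of a job and returned to the
    pool as soon as the job finishes, even if the job raises an exception.
    """

    def __init__(self, gpu_ids):
        self._idle = list(gpu_ids)

    @property
    def idle_count(self) -> int:
        return len(self._idle)

    @contextmanager
    def allocate(self, n: int):
        if n > len(self._idle):
            raise RuntimeError(f"requested {n} GPUs, only {len(self._idle)} idle")
        # Take n idle GPUs for this job.
        granted, self._idle = self._idle[:n], self._idle[n:]
        try:
            yield granted
        finally:
            # Release immediately when the job ends so others can reuse them.
            self._idle.extend(granted)


pool = GpuPool([f"gpu{i}" for i in range(8)])
print(pool.idle_count)  # 8: an instance exists, but no GPUs are held yet

with pool.allocate(4) as gpus_a:  # user A starts a program
    print(gpus_a)  # ['gpu0', 'gpu1', 'gpu2', 'gpu3']
    print(pool.idle_count)  # 4

# User A's program has ended, so all 8 GPUs are available to user B.
with pool.allocate(8) as gpus_b:
    print(len(gpus_b))  # 8

print(pool.idle_count)  # 8
```

The `try`/`finally` is the important design choice: a crashed job must not leak GPUs out of the shared pool.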

Traditional HPC job schedulers such as Slurm can also allocate resources at the process level. However, they generally assume a shared file system and SSH access across all users and all GPU servers. Such software is therefore suitable for internal or research infrastructure but inadequate for commercial cloud services.

Scaling to Multiple GPUs

Existing GPU virtualization solutions have focused on controlling individual GPUs, or further dividing GPUs into smaller units (e.g., NVIDIA’s Multi-Instance GPU). Now the emergence of LLMs has created a demand for using a large number of GPUs together, rather than splitting them.

The most crucial strength of MoAI is seamless multi-GPU scaling. A single virtual device can correspond to multiple physical GPUs: users can treat anything from a few GPUs to thousands as a single PyTorch device, and implement models with billions or trillions of parameters, along with their training scripts, without worrying about manual parallelization. This is made possible by MoAI's tightly integrated software stack, which spans everything from PyTorch down to the GPUs, and in particular by its novel full-trace compiler technology. As a result, MoAI makes LLM training extremely easy!
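The post does not describe how MoAI's compiler partitions work internally. To give a sense of the bookkeeping that manual parallelization involves, here is one standard technique, column-wise (tensor) parallelism for a single linear layer, written in plain Python. The shards run sequentially here; in a real setup each would live on a different GPU and the final concatenation would be a communication step. This is an illustration of the general idea, not MoAI's method.

```python
def matmul(x, w):
    """Dense matmul on nested lists: (batch x d_in) @ (d_in x d_out)."""
    return [
        [sum(row[k] * w[k][j] for k in range(len(w))) for j in range(len(w[0]))]
        for row in x
    ]


def split_columns(w, num_shards):
    """Split the weight matrix into num_shards equal column blocks."""
    d_out = len(w[0])
    if d_out % num_shards:
        raise ValueError("d_out must be divisible by num_shards")
    size = d_out // num_shards
    return [[r[s * size:(s + 1) * size] for r in w] for s in range(num_shards)]


def column_parallel_matmul(x, w, num_shards):
    shards = split_columns(w, num_shards)
    # Each shard computes a slice of the output features.
    partials = [matmul(x, shard) for shard in shards]
    # "All-gather": concatenate the slices back along the feature dimension.
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(matmul(x, w))  # [[1, 2, 4, 7], [3, 4, 10, 15]]
print(column_parallel_matmul(x, w, 2) == matmul(x, w))  # True
```

Doing this by hand for every layer, and choosing shard sizes that fit each GPU, is the kind of work the text says users no longer have to do.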

A virtual device can, of course, aggregate GPUs from different servers connected by InfiniBand or RoCE. Users can easily change the scale of GPU resources (e.g., the number of GPUs) whenever they run a PyTorch program. This can be done with a simple CLI command, without needing to restart or migrate virtual machines or containers.

Another of our blog posts presents a case study of pre-training a 221B-parameter LLM on 1,200 AMD MI250 GPUs, used together as a single virtual device.
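To see why a model of this size cannot fit on one GPU, here is a back-of-the-envelope estimate of model-state memory. It assumes the common mixed-precision Adam accounting of about 16 bytes per parameter and the 128 GB of HBM2e on an MI250. Activations, communication buffers, and framework overhead are ignored, so the real requirement is higher.

```python
import math

PARAMS = 221e9  # model size from the case study

# Assumption: mixed-precision training with Adam keeps roughly 16 bytes of
# model state per parameter: 16-bit weights and gradients (2 bytes each)
# plus fp32 master weights and Adam's two moment estimates (4 bytes each).
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4

MI250_HBM_BYTES = 128e9  # 128 GB HBM2e per MI250 (decimal GB)

weights_only_gb = PARAMS * 2 / 1e9
model_state_tb = PARAMS * BYTES_PER_PARAM / 1e12
min_gpus = math.ceil(PARAMS * BYTES_PER_PARAM / MI250_HBM_BYTES)

print(f"16-bit weights alone: {weights_only_gb:.0f} GB")          # 442 GB
print(f"weights + grads + optimizer state: {model_state_tb:.2f} TB")  # 3.54 TB
print(f"MI250s needed just to hold that state: {min_gpus}")      # 28
```

Memory capacity only sets a floor. The case study used far more GPUs than this minimum, which is expected because the GPU count for pre-training is also driven by how quickly the run needs to finish.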

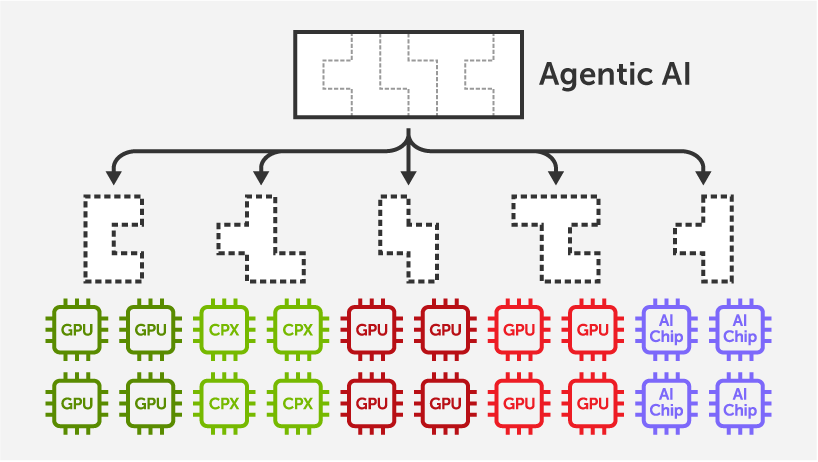

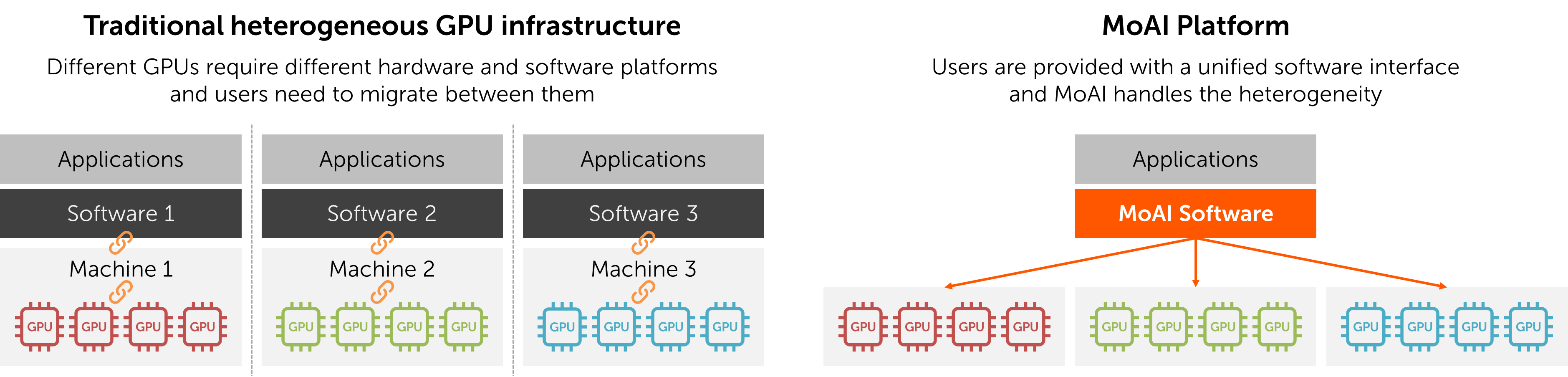

Support for Heterogeneous GPUs

The GPU resource pool of MoAI can include various types of GPUs encompassing different hardware vendors, architectures, and/or models. Users do not need to worry about the specific GPUs assigned to their virtual devices, as there is no need to change machines, software, or configurations when using different types of GPUs.

Infrastructure administrators (e.g., cloud service providers) can customize resource allocation policies for assigning heterogeneous GPUs to users based on their service models or requirements. This significantly enhances the flexibility of AI infrastructure configuration. For example, it allows for the gradual expansion of infrastructure by continuously introducing new GPUs, and it enables a multi-vendor strategy.
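As an illustration of what such a policy might express, here is a minimal sketch that maps hypothetical service tiers to ordered GPU-model preferences with fallback. The tier names, the policy format, and the `allocate` function are assumptions for illustration, not MoAI's configuration schema; it also simplifies by serving each request from a single GPU model.

```python
# Hypothetical policy: ordered GPU-model preferences per service tier.
# Model names are real AMD Instinct parts; everything else is illustrative.
POLICY: dict[str, list[str]] = {
    "premium": ["MI300X", "MI250"],
    "standard": ["MI250", "MI300X"],
}


def allocate(idle: dict[str, int], tier: str, n: int) -> tuple[str, int]:
    """Grant n GPUs of the first preferred model with enough idle devices.

    Mutates `idle` to reflect the allocation.
    """
    for model in POLICY[tier]:
        if idle.get(model, 0) >= n:
            idle[model] -= n
            return model, n
    raise RuntimeError(f"no GPU model has {n} idle devices for tier {tier!r}")


idle = {"MI300X": 8, "MI250": 32}
first = allocate(idle, "premium", 8)    # MI300X available -> ("MI300X", 8)
second = allocate(idle, "premium", 8)   # MI300X exhausted, falls back to MI250
third = allocate(idle, "standard", 16)  # standard tier prefers MI250
print(first, second, third, idle)
```

Because users only see a virtual device, a fallback like the second request is invisible to them, which is what makes this kind of policy practical when new GPU generations are added gradually.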

MoAI currently supports a variety of AMD Instinct GPUs, including the MI250 and MI300X. We plan to extend support to a wider range of devices, such as NVIDIA GPUs and other AI accelerators.

Conclusion

MoAI not only makes GPU infrastructure easily accessible to users at various scales, but it also enhances the efficiency, versatility, and flexibility of the infrastructure through its advanced virtualization technology. For insights into Korea Telecom’s adoption of cloud infrastructure, check out our other blog post. If you’re interested in adopting MoAI or have further questions, feel free to reach out to us at contact@moreh.io.