Reducing inference costs has now become one of the core challenges for all AI data centers and service companies. A wide range of techniques are being devised at every layer – from GPU kernels to model architectures – to reduce the amount of computation and improve GPU utilization.

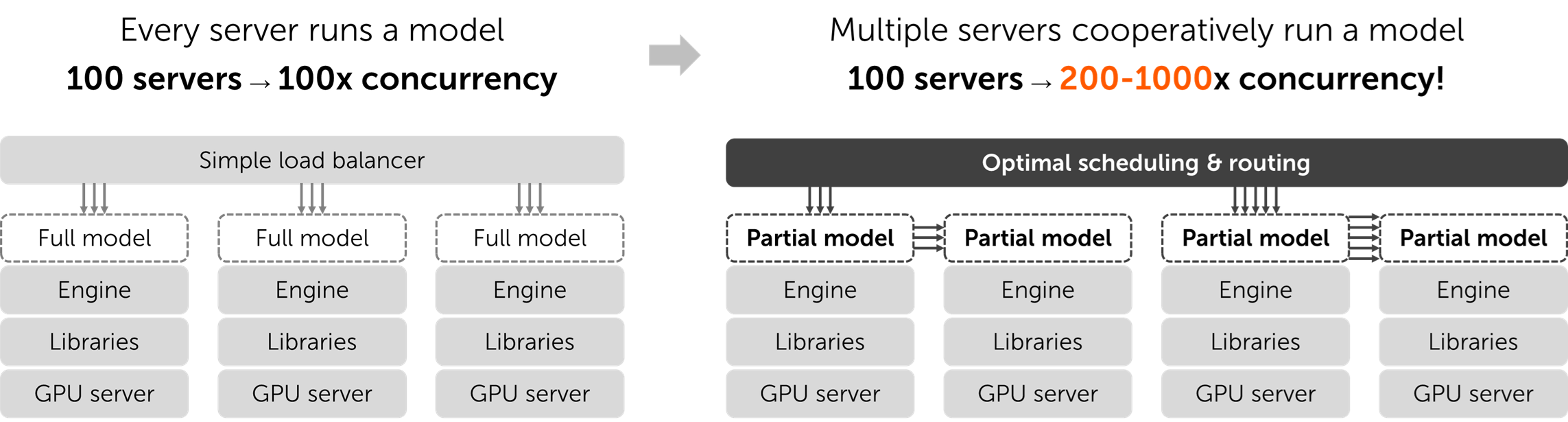

One of the most notable topics is distributed inference at the cluster level. In the past, inference optimization mainly focused on achieving good performance on a single GPU or a single node. At the cluster level, requests were simply distributed evenly across nodes using a load balancer. However, people are now realizing that how models and requests are distributed across multiple GPU nodes has a decisive impact on overall GPU utilization. By effectively applying distributed inference techniques such as disaggregation and smart routing, it is possible to increase the concurrency of a given infrastructure by 2-10x. This, in turn, significantly reduces the cost of AI services, i.e., dollars per token.

Why Distributed Inference Matters Now

The primary reason distributed inference has become important is the growing diversity of inference workloads that AI data centers must handle.

Foundation LLMs are becoming larger and more complex. In particular, as Mixture of Experts (MoE) models such as DeepSeek R1 and GPT-OSS become more widespread, model disaggregation has started to play a significant role in performance. DeepSeek has published the software architecture it uses to serve its 671B MoE model at low cost through efficient cross-node Expert Parallelism (EP).

As applications requiring long-context inference – such as AI coding assistants – continue to grow, the sequence length of incoming requests has become highly variable. The prefill and decode phases have different performance characteristics (prefill is typically compute-bound, while decode is memory-bound), and both have become critical to overall performance. This makes it increasingly important to allocate an appropriate number of GPUs to each phase, apply different parallelization and optimization strategies to each, and schedule requests differently for each. In addition, the KV cache hit ratio is now a critical factor in overall performance.
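A rough roofline estimate shows why the two phases behave so differently. The sketch below is illustrative only: it assumes a single d×d weight matrix stored in FP8 (1 byte per parameter), counts only weight reads as memory traffic, and uses a hypothetical hidden size, prompt length, and decode batch size. It compares each phase's arithmetic intensity (FLOPs per byte read) against the MI300X's ridge point, computed from the FP8 and bandwidth figures quoted later in this post.

```python
# Rough roofline comparison of prefill vs. decode for one d x d weight matrix.
# Simplifying assumptions: FP8 weights (1 byte/param); only weight reads count as traffic.

def arithmetic_intensity(tokens: int, d: int, bytes_per_param: float = 1.0) -> float:
    """FLOPs performed per byte of weights read when `tokens` rows pass through a d x d matmul."""
    flops = 2 * tokens * d * d              # one multiply-add (2 FLOPs) per weight per token
    weight_bytes = d * d * bytes_per_param  # weights are read once, regardless of token count
    return flops / weight_bytes


D = 7168                        # hypothetical hidden size
RIDGE_MI300X = 2.6e15 / 5.3e12  # MI300X: 2.6 PFLOPS FP8 / 5.3 TB/s, about 490 FLOP/byte

for phase, tokens in [("prefill (4,096-token prompt)", 4096), ("decode (batch of 8)", 8)]:
    ai = arithmetic_intensity(tokens, D)
    bound = "compute-bound" if ai > RIDGE_MI300X else "memory-bound"
    print(f"{phase}: {ai:.0f} FLOP/byte -> {bound}")
```

Prefill processes the whole prompt against each weight it loads, so its intensity grows with prompt length; a decode step produces one token per request, so its intensity is tied to the batch size and stays far below the ridge point.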

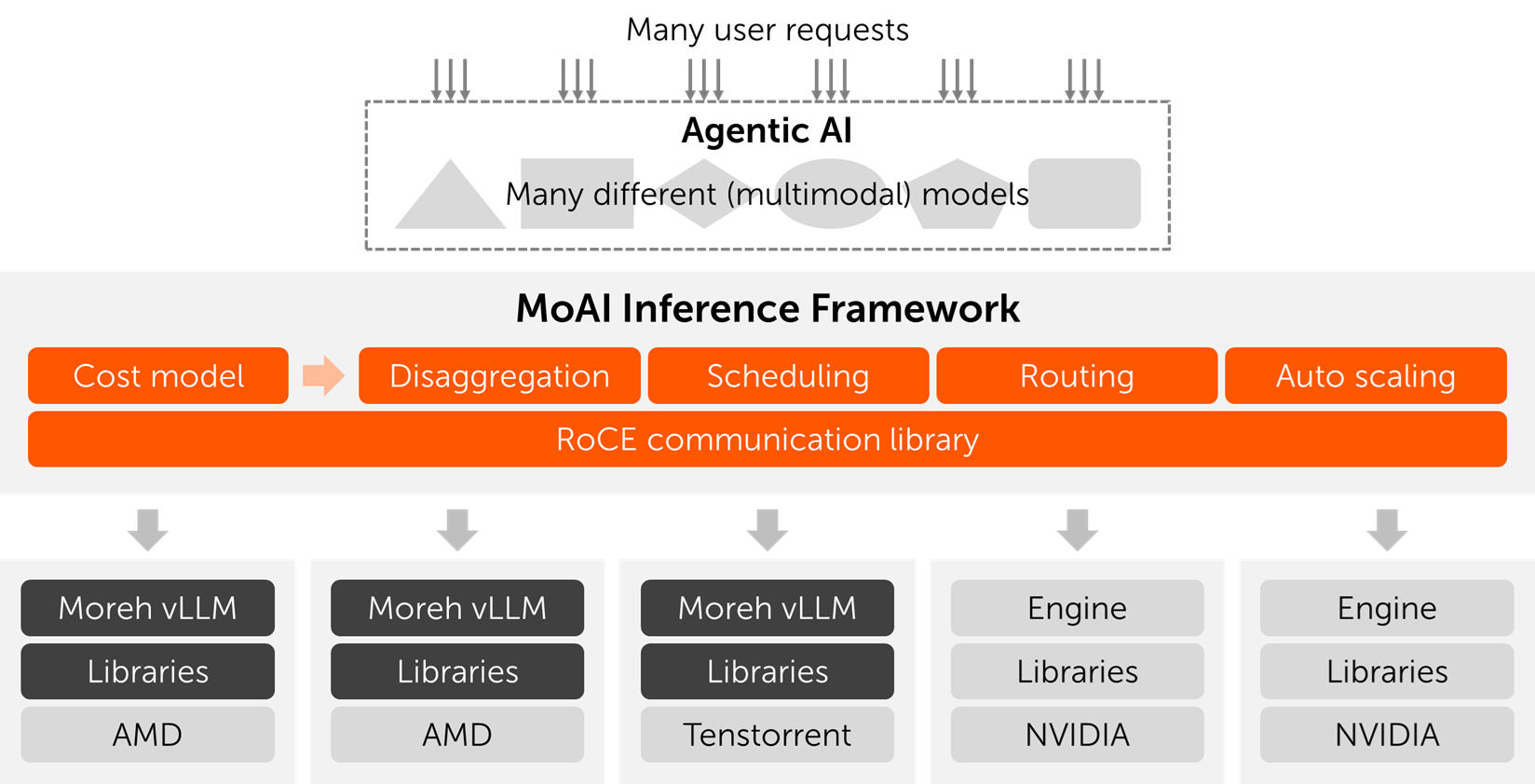

Finally, multi-model agent workflows – in which multiple multimodal models are dynamically invoked depending on the situation – are increasingly common. As a result, efficiently allocating GPUs across different models and ensuring service level objectives (SLOs) for a wide range of applications has become a challenging problem. Data centers face the difficult task of optimizing for conflicting metrics – such as throughput improvement, latency reduction, and fairness guarantees – while trying to understand the performance characteristics of complex GPU hardware.

MoAI Inference Framework

Distributed inference does not simply mean applying individual disaggregation and routing techniques such as prefill-decode disaggregation, expert parallelism, and KV cache aware routing. The greater challenge lies in effectively combining multiple techniques to achieve meaningful performance improvements on a specific system. Although many open-source projects now support individual distributed inference techniques, putting them together and deploying them on real infrastructure still relies on manual effort.
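To make one of these techniques concrete, KV cache aware routing can be sketched as follows. This is a generic illustration, not the MoAI Inference Framework's implementation: the block size, the `Worker` class, and the `route` function are hypothetical. Each request's tokens are split into fixed-size blocks whose hashes are chained, so equal hashes imply equal prefixes. The router sends the request to the worker that already caches the longest prefix and breaks ties by load. Eviction is ignored for simplicity.

```python
import hashlib
from dataclasses import dataclass, field

BLOCK_TOKENS = 16  # hypothetical KV-cache block size in tokens


def prefix_block_hashes(tokens: list[int]) -> list[str]:
    """Hash each full block chained with its predecessor, so equal hashes mean equal prefixes."""
    hashes, prev = [], ""
    full = len(tokens) - len(tokens) % BLOCK_TOKENS
    for i in range(0, full, BLOCK_TOKENS):
        block = ",".join(map(str, tokens[i:i + BLOCK_TOKENS]))
        prev = hashlib.sha256(f"{prev}|{block}".encode()).hexdigest()
        hashes.append(prev)
    return hashes


@dataclass
class Worker:
    name: str
    active_requests: int = 0
    cached_blocks: set[str] = field(default_factory=set)


def cached_prefix_len(worker: Worker, hashes: list[str]) -> int:
    """Number of leading blocks of the request already cached on `worker`."""
    n = 0
    for h in hashes:
        if h not in worker.cached_blocks:
            break
        n += 1
    return n


def route(tokens: list[int], workers: list[Worker]) -> Worker:
    hashes = prefix_block_hashes(tokens)
    # Prefer the longest cached prefix; break ties by fewer in-flight requests.
    best = max(workers, key=lambda w: (cached_prefix_len(w, hashes), -w.active_requests))
    best.active_requests += 1
    best.cached_blocks.update(hashes)  # simplification: blocks stay cached (no eviction)
    return best


workers = [Worker("a"), Worker("b")]
system_prompt = list(range(32))  # two blocks shared by the first two requests
print(route(system_prompt + list(range(100, 116)), workers).name)  # a (tie, first worker wins)
print(route(system_prompt + list(range(200, 216)), workers).name)  # a (2 cached blocks)
print(route(list(range(500, 548)), workers).name)                   # b (no hits, less loaded)
```

Scoring by prefix length first maximizes the KV cache hit ratio, while the load tie-breaker keeps unrelated requests from piling onto one worker. A production router would also need to weigh the two, since always chasing cache hits can overload a single worker.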

As a solution, Moreh presents the MoAI Inference Framework. It is designed to enable efficient distributed inference on cluster systems composed not only of NVIDIA GPUs but also of AMD GPUs and Tenstorrent AI accelerators. Leveraging its unique cost model, the framework automatically identifies, applies, and dynamically adjusts the optimal ways of utilizing numerous accelerators in data centers. It delivers faster inference speeds, higher resource utilization, and greater cost-effectiveness simultaneously, even in today’s most complex AI workload environments.

Mixing Heterogeneous Accelerators in AI Data Centers

As a logical consequence of the growing importance of distributed inference, more AI data centers will seek to maximize overall computational efficiency by mixing different types of accelerators. This is only natural, since no single accelerator can be optimal for all diverse inference workloads. For example, the optimal accelerator may differ between the prefill and decode phases, between short input sequences and long input sequences, and between language models and video generation models.

NVIDIA’s recent announcement of the Rubin CPX also aligns with this trend. This chip emphasizes compute performance over memory bandwidth, adopting GDDR7 memory instead of HBM. Specifically, its memory bandwidth is only 2 TB/s – just 10% of the Rubin GPU (VR200), which offers 20.5 TB/s. However, its FP4 performance reaches 20.0 PFLOPS, about 60% of the Rubin GPU’s 33.3 PFLOPS.
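One way to quantify this difference is the ridge point: peak FLOP/s divided by memory bandwidth. A kernel whose arithmetic intensity (FLOPs per byte moved) falls below the ridge point is memory-bound on that chip, and one above it is compute-bound. The short calculation below uses only the FP4 and bandwidth figures quoted above.

```python
# Ridge point = peak FLOP/s / memory bandwidth, in FLOP/byte.
SPECS = {
    # name: (FP4 PFLOPS, memory bandwidth in TB/s), as quoted above
    "Rubin GPU (VR200)": (33.3, 20.5),
    "Rubin CPX": (20.0, 2.0),
}


def ridge_point(pflops: float, tb_per_s: float) -> float:
    """Arithmetic intensity at which the chip moves from memory-bound to compute-bound."""
    return (pflops * 1e15) / (tb_per_s * 1e12)


for name, (pflops, bw) in SPECS.items():
    print(f"{name}: {ridge_point(pflops, bw):,.0f} FLOP/byte")
```

This prints about 1,624 FLOP/byte for the Rubin GPU and 10,000 FLOP/byte for CPX. A workload therefore needs roughly 6x higher arithmetic intensity to keep CPX's compute units busy. Below that point, CPX is limited by its 2 TB/s of memory bandwidth.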

Cluster systems such as NVIDIA’s Vera Rubin NVL144 CPX, which integrates both CPX and GPUs, can leverage these performance differences in a variety of ways. Most fundamentally, in LLM inference, the compute-bound prefill phase can be executed on CPX while the memory-bound decode phase runs on GPUs – this is the use case that NVIDIA has disclosed for CPX. Beyond that, however, there are many other possibilities. For example, relatively compute-bound video generation models can be executed on CPX while memory-bound language models run on GPUs. Or, within a single model, one could imagine offloading FFN layers to CPX while running Attention layers on GPUs, extending the Attention-FFN disaggregation technique. Another approach might be to rely primarily on GPUs for small batch sizes but allocate more work to CPX as the batch size increases.
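The last idea, shifting work toward CPX as the batch grows, can be illustrated with a toy roofline model. This is a sketch under strong assumptions, not a description of how NVIDIA or Moreh schedule work. It models a single hypothetical layer of `PARAMS` FP4 weights, assumes GPU and CPX each hold a full copy of those weights and read them once per step, and ignores activations, KV cache traffic, and communication. `best_split` searches for the number of tokens to place on CPX so that the slower device finishes as early as possible.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    peak_flops: float  # FLOP/s
    mem_bw: float      # bytes/s


# FP4 figures quoted above; PARAMS is a hypothetical layer size.
GPU = Device("Rubin GPU", 33.3e15, 20.5e12)
CPX = Device("Rubin CPX", 20.0e15, 2.0e12)
PARAMS = 1e9
BYTES_PER_PARAM = 0.5  # FP4


def step_time(dev: Device, tokens: int) -> float:
    """Roofline time for `tokens` tokens through the layer on `dev` (its own weight copy)."""
    if tokens == 0:
        return 0.0
    compute = 2 * tokens * PARAMS / dev.peak_flops
    memory = PARAMS * BYTES_PER_PARAM / dev.mem_bw
    return max(compute, memory)


def best_split(batch: int) -> int:
    """Tokens to place on CPX so that the slower of the two devices finishes as early as possible."""
    return min(range(batch + 1),
               key=lambda b: max(step_time(GPU, batch - b), step_time(CPX, b)))


for batch in (64, 1024, 8192, 65536):
    b = best_split(batch)
    print(f"batch {batch:>6}: {b / batch:.1%} of tokens on CPX")
```

In this toy model, CPX receives no tokens for the two small batches, because reading the weights over 2 TB/s alone takes longer than the GPU's entire step. For the two large batches, CPX receives about 37.5% of the tokens, which is close to 20 / (20 + 33.3): once both devices are compute-bound, the work splits in proportion to peak FLOP/s.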

Beyond the combination of NVIDIA’s GPU and CPX, many other configurations for building heterogeneous clusters can be considered. For example, it is common practice in large-scale data centers to mix two different generations of NVIDIA GPUs. Another option is to combine NVIDIA GPUs with AMD GPUs. This not only helps prevent lock-in to a single hardware vendor, but also leverages the fact that AMD GPUs typically deliver better performance than same-generation NVIDIA GPUs on memory-bound workloads. Furthermore, GPUs can also be mixed with Tenstorrent AI accelerators. The Tenstorrent Wormhole and Blackhole processors use GDDR6 memory and, similar to CPX, are well suited for compute-bound workloads.

Software Challenges in Distributed Inference on Heterogeneous Accelerators

However, realizing this in practice comes with significant software challenges. Computation must be sufficiently optimized for different accelerator architectures. High-bandwidth, low-latency communication must be enabled across heterogeneous accelerators. While RDMA communication between devices from different vendors is physically possible, it faces many software-level hurdles.

The biggest challenge lies in efficient model disaggregation, workload distribution, and scaling across heterogeneous accelerators. Simply assigning one workload type to each accelerator type is not enough to achieve true efficiency. For example, the Vera Rubin NVL144 CPX system pairs CPX and GPUs in a 2:1 ratio, but the ratio of prefill to decode demand is neither fixed nor guaranteed to match 2 CPX : 1 GPU. Without dynamic resource allocation at the software level, idle resources inevitably arise. The problem becomes even more complex when multiple multimodal models must be served simultaneously.
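A minimal sketch shows how a fixed 2:1 ratio leaves capacity idle. It assumes a purely static assignment, with CPX running only prefill and GPUs running only decode. The work and rate units are hypothetical and abstract. The slower pool caps end-to-end request throughput, and the faster pool sits partly idle.

```python
def static_pd_utilization(n_cpx: int, n_gpu: int, prefill_work: float, decode_work: float,
                          cpx_rate: float = 1.0, gpu_rate: float = 1.0) -> tuple[float, float]:
    """(CPX utilization, GPU utilization) when CPX runs only prefill and GPUs run only decode.

    Hypothetical abstract units: a device completes `rate` work units per second, and every
    request needs `prefill_work` units of prefill and `decode_work` units of decode.
    """
    prefill_capacity = n_cpx * cpx_rate / prefill_work   # requests/s the CPX pool can prefill
    decode_capacity = n_gpu * gpu_rate / decode_work     # requests/s the GPU pool can decode
    throughput = min(prefill_capacity, decode_capacity)  # the slower pool caps the pipeline
    return throughput / prefill_capacity, throughput / decode_capacity


# 2 CPX : 1 GPU, with two hypothetical workload mixes
print(static_pd_utilization(2, 1, prefill_work=2.0, decode_work=1.0))  # (1.0, 1.0)
print(static_pd_utilization(2, 1, prefill_work=0.5, decode_work=1.0))  # (0.25, 1.0)
```

When prompts are long relative to outputs, the 2:1 ratio happens to be balanced. When the mix shifts toward short prompts, three quarters of the CPX capacity goes unused under static assignment. This gap is what dynamic, software-level reallocation aims to close.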

The MoAI Inference Framework demonstrates its full value in heterogeneous accelerator environments. With automated distributed inference based on the cost model, it can dynamically and effectively utilize heterogeneous accelerators together. The framework also includes a communication library that enables RDMA communication across hardware from different vendors connected via RoCE networks. Moreh vLLM, the backend of the MoAI Inference Framework, integrates library- and model-level optimizations for AMD GPUs and Tenstorrent AI accelerators, unlocking their full potential and enabling performance on par with, or even superior to, that of NVIDIA GPUs.

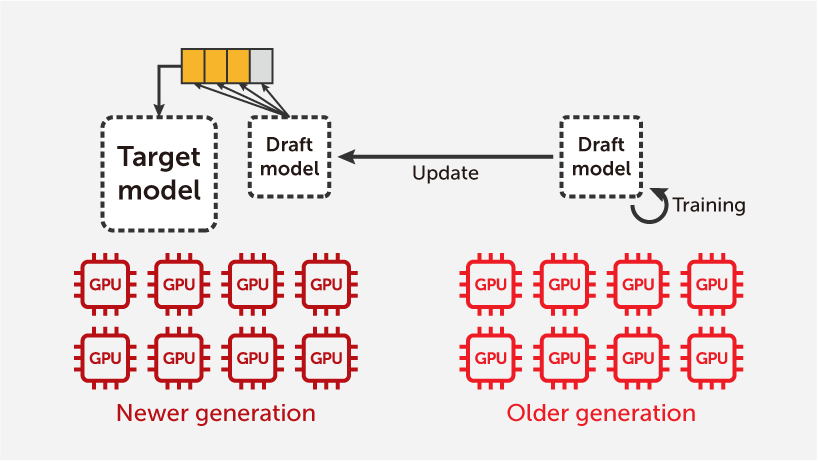

Case Study: Disaggregation Between AMD MI300X and MI308X GPUs

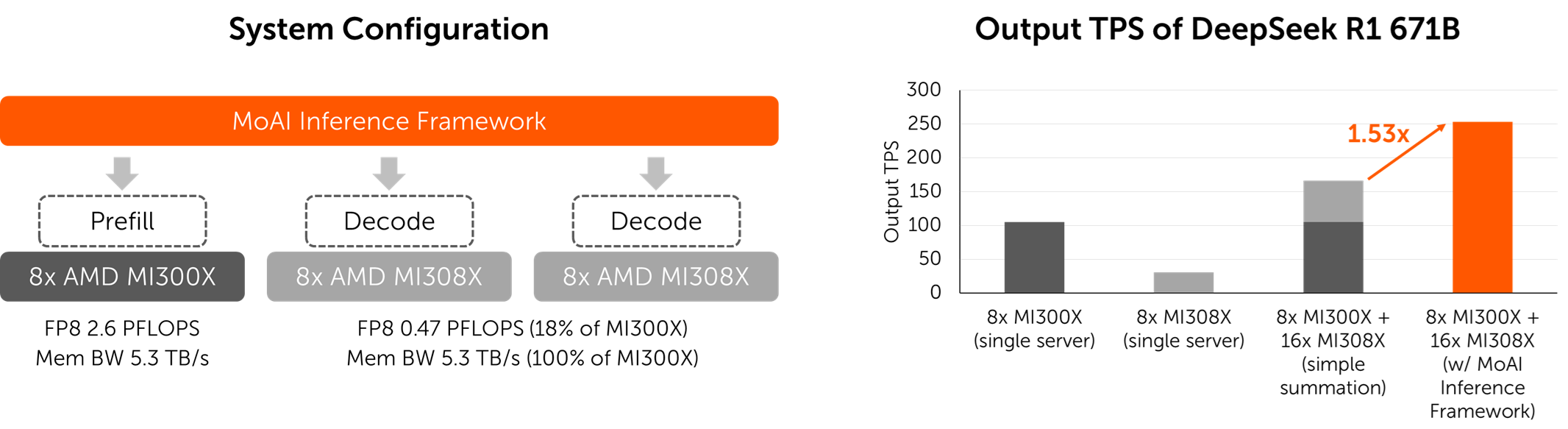

We demonstrate a real-world case of applying distributed inference across heterogeneous accelerators using the MoAI Inference Framework.

AMD’s MI308X GPU is a variant of the original MI300X GPU. While the MI308X’s memory bandwidth is the same as the MI300X’s at 5.3 TB/s, its FP8 compute performance is only 0.47 PFLOPS – just 18% of the MI300X’s 2.6 PFLOPS. Similar to the relationship between GPUs and CPX, this performance difference makes the MI300X better suited to the compute-bound prefill phase. The MI308X, which matches the MI300X’s memory bandwidth, gives up little performance on the memory-bound decode phase.
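A simple roofline calculation with the figures above makes this concrete. The ridge points work out to about 490 FLOP/byte for the MI300X and about 89 FLOP/byte for the MI308X. The sketch compares how long each GPU takes on a kernel of a given arithmetic intensity. The two intensities used (16 FLOP/byte for a decode-like kernel and 8,192 FLOP/byte for a prefill-like kernel) are illustrative assumptions, not measurements of DeepSeek R1.

```python
# FP8 peak FLOP/s and memory bandwidth (bytes/s), as quoted above.
SPECS = {"MI300X": (2.6e15, 5.3e12), "MI308X": (0.47e15, 5.3e12)}


def roofline_time(intensity: float, peak: float, bw: float, bytes_moved: float = 1.0) -> float:
    """Roofline time for a kernel that moves `bytes_moved` bytes at `intensity` FLOP/byte."""
    return max(intensity * bytes_moved / peak, bytes_moved / bw)


def mi308x_slowdown(intensity: float) -> float:
    """How many times as long the MI308X takes as the MI300X on the same kernel."""
    return roofline_time(intensity, *SPECS["MI308X"]) / roofline_time(intensity, *SPECS["MI300X"])


for label, ai in [("decode-like", 16), ("prefill-like", 8192)]:
    print(f"{label} ({ai} FLOP/byte): MI308X takes {mi308x_slowdown(ai):.2f}x as long")
```

Below both ridge points, the two GPUs are limited by the same 5.3 TB/s, so they take equally long. Above both ridge points, the MI308X takes 2.6 / 0.47, or about 5.5x, as long. This asymmetry is what prefill-decode disaggregation exploits.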

We applied prefill-decode disaggregation with the MoAI Inference Framework on a cluster consisting of one MI300X server and two MI308X servers, each with 8 GPUs. When running the DeepSeek R1 671B model end-to-end without disaggregation, the MI300X server achieved an output throughput of 105.16 tokens/sec, whereas each MI308X server reached 30.42 tokens/sec. If these servers were simply connected with a load balancer, the total output throughput of the cluster would have been only 166.00 tokens/sec (105.16 + 2 × 30.42). However, by executing the prefill phase on the MI300X server and the decode phase on the MI308X servers, the total output throughput increases to 253.59 tokens/sec, an improvement of about 53%.
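The baseline and the improvement follow directly from the per-server measurements reported above. The only assumption is that, behind a load balancer, each server works independently, so their throughputs simply add.

```python
mi300x_server = 105.16  # tokens/s, one MI300X server running DeepSeek R1 end-to-end
mi308x_server = 30.42   # tokens/s, one MI308X server running DeepSeek R1 end-to-end

baseline = mi300x_server + 2 * mi308x_server  # load balancer: servers add up independently
disaggregated = 253.59  # tokens/s, prefill on MI300X, decode on the two MI308X servers

print(f"baseline {baseline:.2f} tok/s, gain {disaggregated / baseline - 1:.1%}")
# prints: baseline 166.00 tok/s, gain 52.8%
```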

Conclusion

The advent of the multimodal and agentic AI era necessitates a fundamental rethinking of traditional single-model, single-server inference systems. At the same time, NVIDIA’s announcement of the Rubin CPX processor has drawn attention to distributed inference techniques that leverage heterogeneous accelerators according to their performance characteristics.

The MoAI Inference Framework is the best option for implementing distributed inference in real AI data centers. It delivers optimal performance on non-NVIDIA accelerators including AMD GPUs and Tenstorrent processors, and also automates the difficult and complex task of distributing (partial) models and workloads across heterogeneous accelerators.

If you’d like to see results beyond the case study here, or try the MoAI Inference Framework yourself, please contact us at contact@moreh.io.