December 26, 2025

SLOPE Engine improves long-context prefill performance by applying context parallelism across multiple GPU servers. The approach also makes efficient use of older-generation GPUs.

November 18, 2025

Moreh combines Tenstorrent’s lightweight, scalable hardware with its proprietary software stack to deliver an efficient and flexible solution for large-scale AI data centers.

November 13, 2025

Moreh demonstrated DeepSeek-R1 inference at a decoding throughput of more than 21,000 tokens/sec by implementing expert parallelism (EP) on the ROCm software stack.

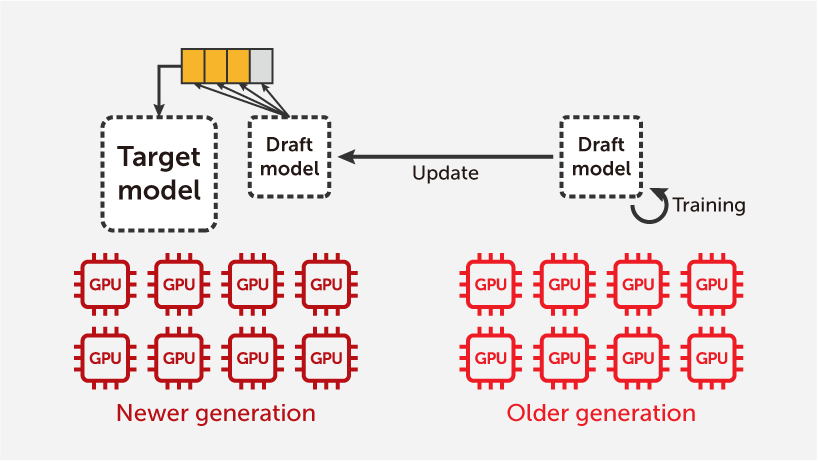

November 10, 2025

TIDE optimizes inference on newer GPUs by using older or idle GPUs to train draft models at runtime, improving overall cost-performance at the system level.

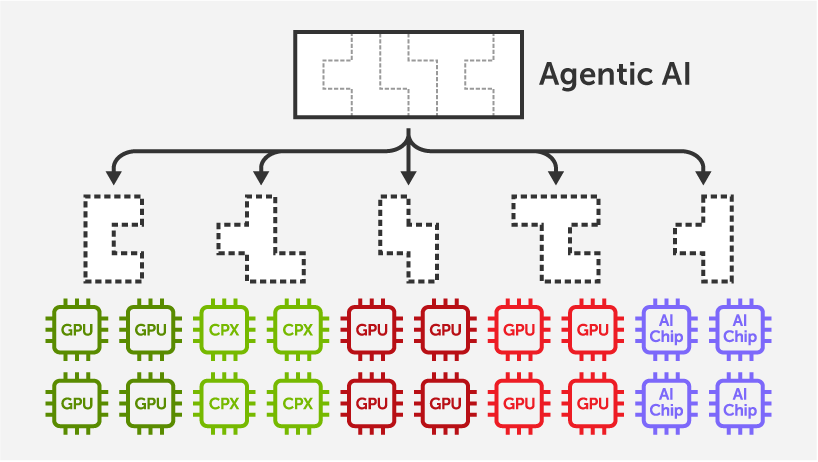

September 23, 2025

MoAI Inference Framework supports automatic, efficient distributed inference on heterogeneous accelerator combinations such as AMD MI300X with MI308X, and NVIDIA Rubin CPX with GPUs.

August 30, 2025

Moreh vLLM achieves 1.68x higher output TPS (tokens per second), 2.02x lower TTFT (time to first token), and 1.59x lower TPOT (time per output token) compared to the original vLLM for Meta's Llama 3.3 70B model.

August 29, 2025

Moreh vLLM achieves 1.68x higher output TPS, 1.75x lower TTFT, and 1.70x lower TPOT compared to the original vLLM for the DeepSeek V3/R1 671B model.

February 20, 2025

MoAI provides a PyTorch-compatible environment that makes fine-tuning LLMs on hundreds of AMD GPUs simple, including the DeepSeek 671B MoE model.

December 2, 2024

Moreh announces Motif, a high-performance 102B-parameter Korean large language model (LLM), which will be released as an open-source model.

September 3, 2024

There are no barriers to fine-tuning Llama 3.1 405B on the MoAI platform: the Moreh team has demonstrated fine-tuning the model on 192 AMD GPUs.