February 20, 2025

MoAI provides a PyTorch-compatible environment that makes LLM fine-tuning on hundreds of AMD GPUs straightforward, including for DeepSeek 671B MoE.

January 28, 2025

Global Corporate Venturing — The startup that perhaps comes closest to DeepSeek’s approach is South Korea’s Moreh, which has created a software tool that allows users to build and optimise their own AI models using a more flexible, modular approach.

December 2, 2024

Moreh announces the release of Motif, a high-performance 102B Korean large language model (LLM), which will be made available as an open-source model.

November 18, 2024

Joint R&D of AI data center solutions integrating Tenstorrent's semiconductors with Moreh's software, targeting the NVIDIA-dominated market with competitive solutions.

September 3, 2024

There are no barriers to fine-tuning Llama 3.1 405B on the MoAI platform. The Moreh team has demonstrated fine-tuning the model on 192 AMD GPUs.

August 19, 2024

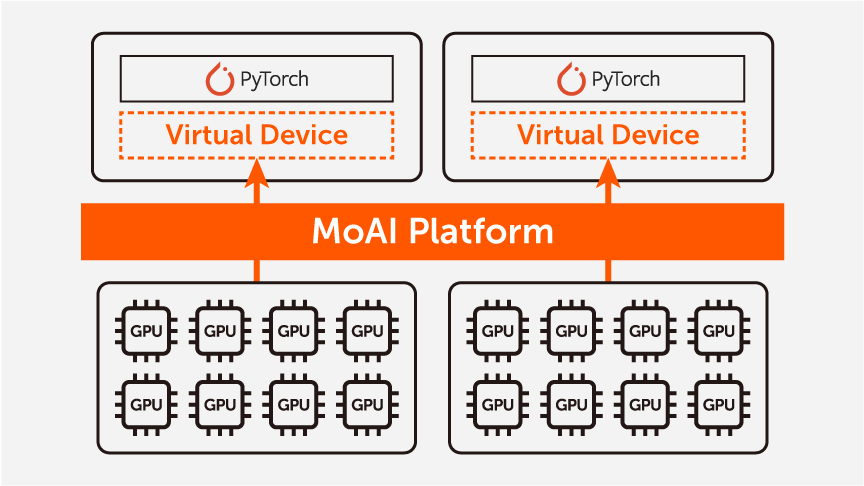

The MoAI platform provides comprehensive GPU virtualization including fine-grained resource allocation, multi-GPU scaling, and heterogeneous GPU support.

January 18, 2024

The Korea Economic Daily — South Korean artificial intelligence tech startup Moreh Inc. said on Thursday that its large language model (LLM) topped a performance assessment by global leading AI platform operator Hugging Face Inc.

October 26, 2023

TechCrunch — Advanced Micro Devices (AMD) and Korean telco KT are among the investors of Moreh, which builds an AI software tool that optimizes and creates AI models.

August 22, 2023

Moreh announced the successful completion of a monumental LLM (large language model) training project in collaboration with KT, a leading CSP in Korea.

August 14, 2023

Moreh trained the largest-ever Korean LLM, with 221B parameters, on the MoAI platform and a cluster of 1,200 AMD MI250 GPUs.