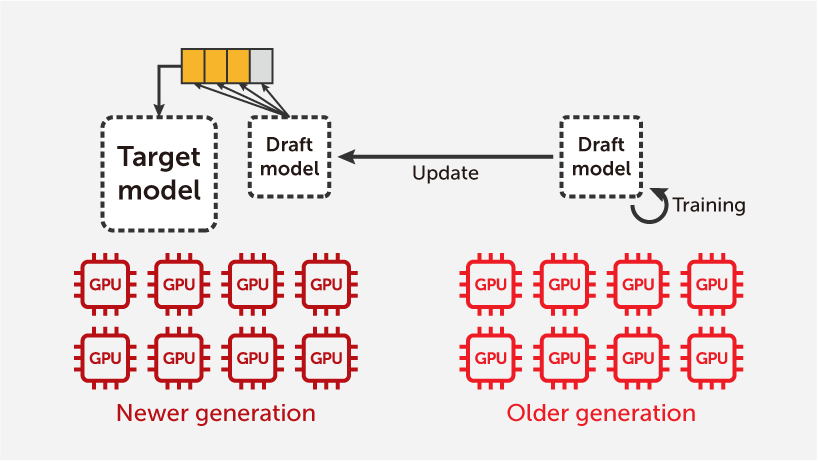

DeepSeek’s latest V3 and R1 models perform on par with OpenAI’s GPT-4o and o1 models, respectively, and have drawn significant attention in the AI industry. Notably, DeepSeek developed a 671-billion-parameter model at low cost on a relatively small cluster of 2,048 NVIDIA H800 GPUs, rather than on NVIDIA’s mainstream high-end GPUs such as the H100 or B100/B200. To maximize the efficiency of its GPU infrastructure, DeepSeek optimized the entire software stack, from the compute kernels running on individual GPUs to the cluster-level framework and platform. In addition, they devised a new reinforcement learning (RL) technique to enhance the model’s reasoning capabilities. Both DeepSeek V3 and R1 have been released as open source and are regarded as a remarkable contribution to the AI ecosystem.

If you want to adopt the DeepSeek V3 and R1 models in your own data center, the MoAI platform is a must-have solution. As part of its universal support for PyTorch and a wide range of AI models, MoAI enables efficient inference and fine-tuning of 671B-parameter Mixture-of-Experts (MoE) models. More importantly, it enables efficient GPU clustering on alternative hardware such as AMD MI200 and MI300 series GPUs, much as DeepSeek built its cluster on H800s instead of mainstream high-end GPUs.

Fine-Tuning Environment on the MoAI Platform

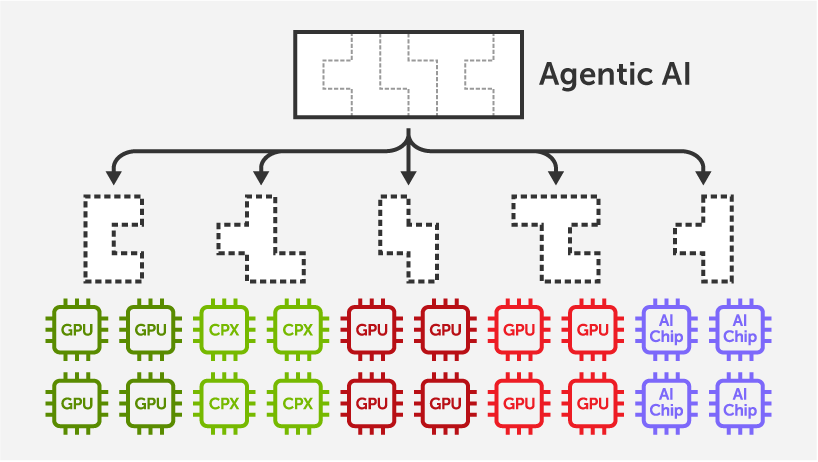

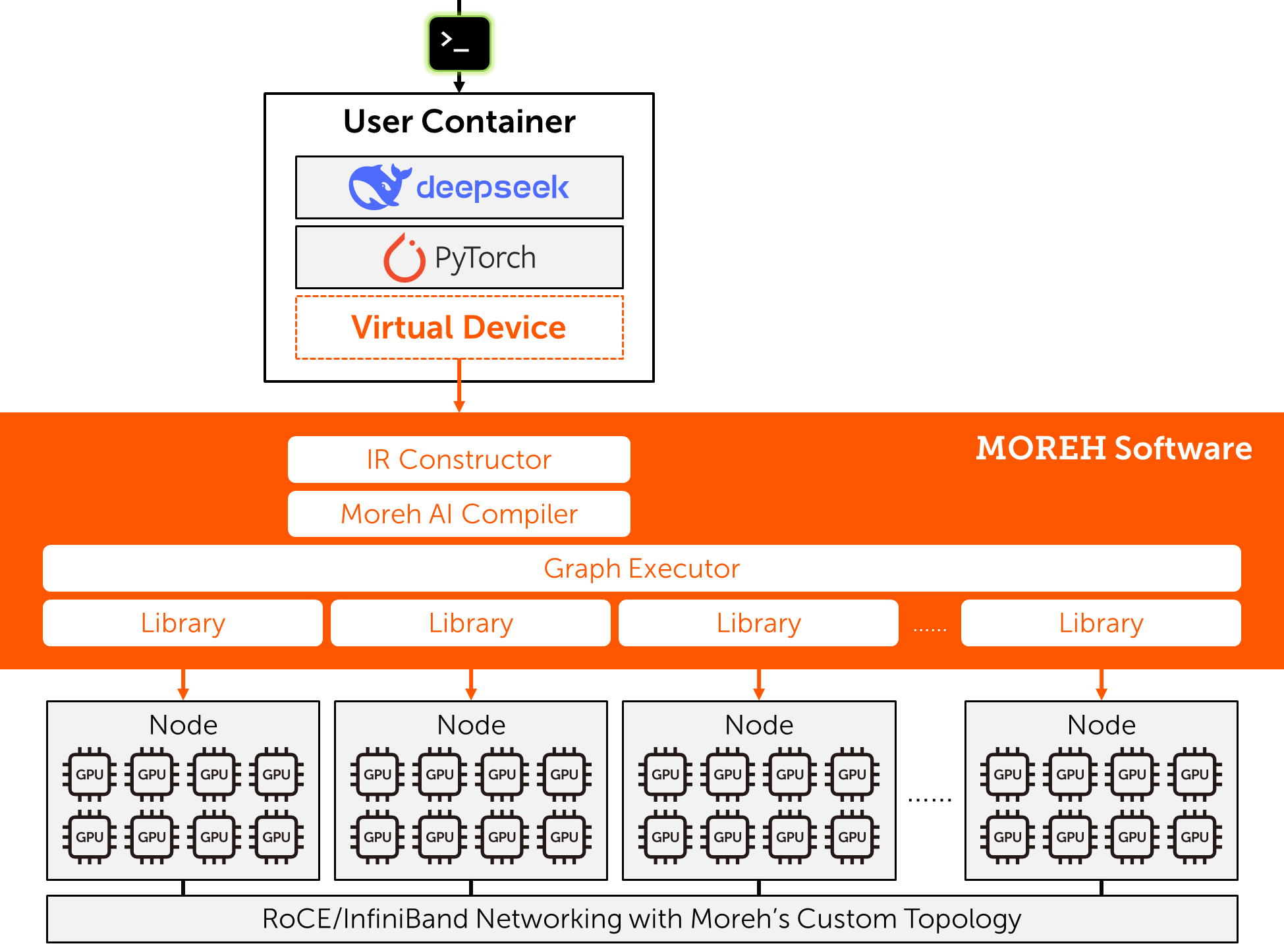

MoAI provides a PyTorch-compatible development environment that makes LLM training and fine-tuning easy, including for DeepSeek’s 671B MoE model. To fine-tune DeepSeek V3 or R1 across hundreds to thousands of GPUs, you simply treat the cluster as a single massive GPU: use the existing PyTorch implementation of the DeepSeek model, write a simple PyTorch training script, and ignore the underlying hardware architecture, such as the number of GPUs and how they are interconnected. MoAI’s middleware then automatically finds the optimal parallelization strategy, applies it to the target cluster, performs optimizations such as operation fusion, and manages GPU memory efficiently.
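To make “finding a parallelization strategy” more concrete, here is a toy sketch in Python. It is not MoAI’s algorithm, which this post does not describe: the `Strategy` type, the memory model (model state split evenly across tensor- and pipeline-parallel ranks, replicated across data-parallel ranks), and the cost weights in `estimated_step_time` are all hypothetical. The sketch enumerates every way to factor the GPU count into data-, tensor-, and pipeline-parallel degrees, discards splits whose per-GPU model state exceeds GPU memory, and picks the split with the lowest estimated step time.

```python
# Toy search over (data, tensor, pipeline) parallel degrees.
# HYPOTHETICAL: not MoAI's algorithm; the cost and memory models are invented.
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Strategy:
    data: int      # data-parallel degree (model replicas)
    tensor: int    # tensor-parallel degree (each layer split across GPUs)
    pipeline: int  # pipeline-parallel degree (layer stages)


def divisors(n: int) -> list[int]:
    return [d for d in range(1, n + 1) if n % d == 0]


def candidate_strategies(num_gpus: int) -> list[Strategy]:
    """Every (data, tensor, pipeline) split whose product equals num_gpus."""
    out = []
    for t, p in product(divisors(num_gpus), repeat=2):
        if num_gpus % (t * p) == 0:
            out.append(Strategy(num_gpus // (t * p), t, p))
    return out


def shard_gb(s: Strategy, model_gb: float) -> float:
    """Model state per GPU: split by tensor x pipeline, replicated across data."""
    return model_gb / (s.tensor * s.pipeline)


def estimated_step_time(s: Strategy, model_gb: float, microbatches: int = 16) -> float:
    """Hypothetical cost in arbitrary units (lower is better)."""
    bubble = (s.pipeline - 1) / (microbatches + s.pipeline - 1)  # pipeline idle fraction
    tensor_comm = 0.05 * (s.tensor - 1)  # more TP ranks -> more per-layer collectives
    data_comm = 0.001 * shard_gb(s, model_gb) * (s.data - 1) / s.data  # gradient all-reduce
    return 1.0 + bubble + tensor_comm + data_comm  # 1.0 = compute, equal for all splits


def find_best(num_gpus: int, model_gb: float, gpu_mem_gb: float) -> Strategy:
    """Cheapest split whose per-GPU model state fits in GPU memory."""
    fitting = [s for s in candidate_strategies(num_gpus)
               if shard_gb(s, model_gb) <= gpu_mem_gb]
    if not fitting:
        raise ValueError("model state does not fit on this cluster")
    return min(fitting, key=lambda s: estimated_step_time(s, model_gb))


best = find_best(num_gpus=16, model_gb=400, gpu_mem_gb=64)
print(best)  # Strategy(data=2, tensor=2, pipeline=4)
```

A real system would also have to weigh expert parallelism for the MoE layers, activation memory, and the actual network topology. The point of the sketch is only that, once a cost model exists, the search is mechanical, which is what lets a framework take it off the user’s hands.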

With the MoAI platform, you can use cost-effective AMD data center GPUs such as the MI300X, MI325X, and MI308X (competitors to NVIDIA’s H100, H200, and H20, respectively) and build the interconnection network with Moreh’s custom topology instead of a fully non-blocking fat-tree network between GPUs. This reduces costs and improves the scalability of the infrastructure.

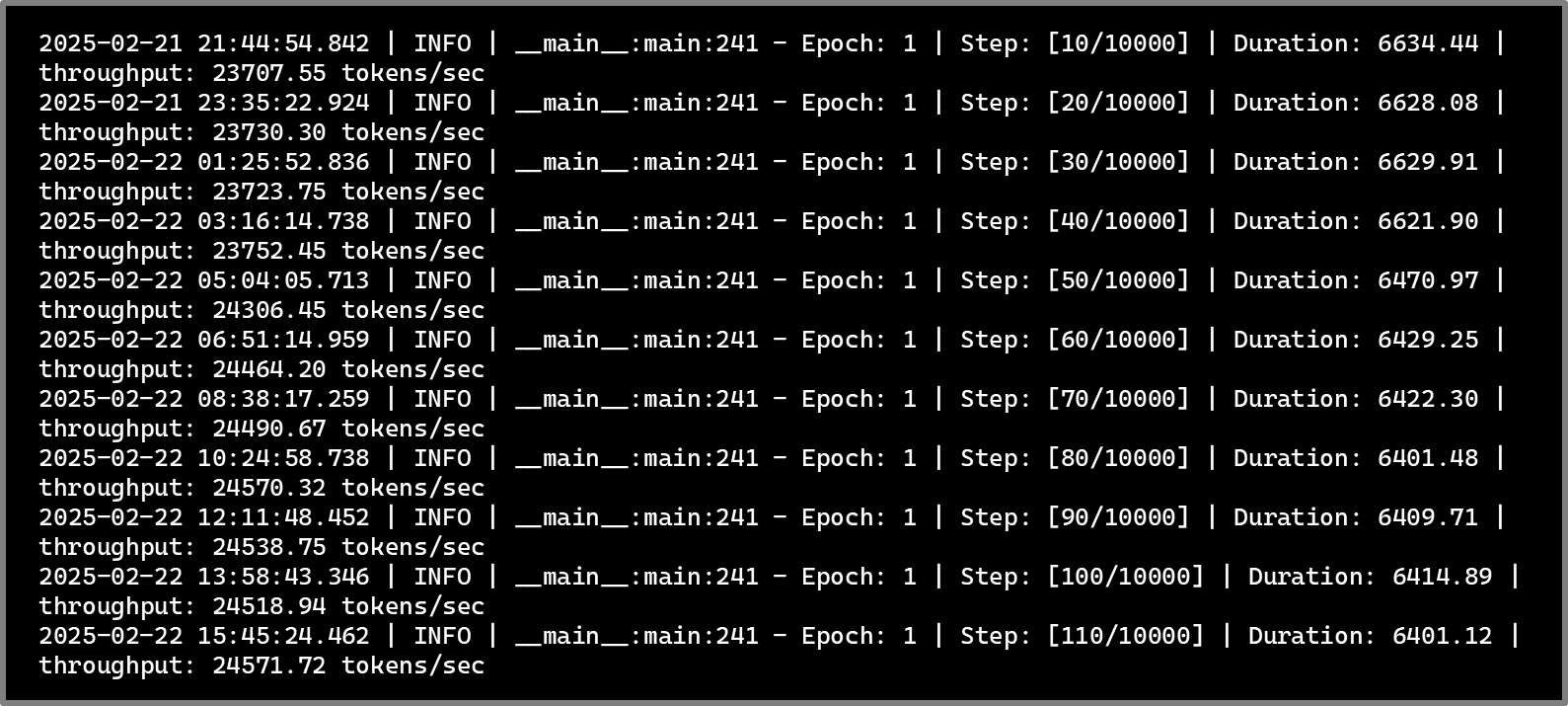

Experiment on 240 AMD MI250 GPUs

To demonstrate the MoAI platform’s capabilities, our team ran full fine-tuning of DeepSeek R1 on a cluster of 240 AMD MI250 GPUs. As in our previous post on Llama 3.1 405B fine-tuning, we took the DeepSeek R1 implementation from Hugging Face, wrote a fine-tuning script for the MoE architecture (including training of the gate network), and ran it on a single virtual GPU. Full fine-tuning achieved a throughput of approximately 24,500 tokens/sec.
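The gate network mentioned above is the router that decides which experts process each token. The sketch below is a generic softmax top-k router in pure Python; it is an illustrative assumption, not DeepSeek’s exact router (which differs in its details), and the function names are hypothetical. Because each selected expert’s output is scaled by a gating weight computed from `gate_w`, the gate parameters receive gradients during full fine-tuning, even though the top-k selection itself is not differentiable.

```python
# Generic softmax top-k MoE router (illustrative; not DeepSeek's exact gate).
import math


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def top_k_route(gate_w, x, k):
    """Pick the k highest-scoring experts for token x.

    Returns [(expert_index, gating_weight), ...]; the weights are a softmax
    over the selected experts' logits, so they sum to 1.
    """
    logits = matvec(gate_w, x)  # one logit per expert
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(gate_w, experts, x, k=2):
    """Combine the routed experts' outputs, weighted by their gating values."""
    out = [0.0] * len(x)
    for i, g in top_k_route(gate_w, x, k):
        y = experts[i](x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out


# Four toy experts; expert i multiplies its input by (i + 1).
experts = [lambda v, s=i + 1: [s * a for a in v] for i in range(4)]
gate_w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
x = [1.0, 2.0]

routes = top_k_route(gate_w, x, k=2)  # logits are [1, 2, 3, -3], so experts 2 and 1 win
out = moe_forward(gate_w, experts, x, k=2)
```

Only the k selected experts run for each token, which is how an MoE model with 671B total parameters keeps per-token compute far below that of a dense model of the same size.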

- GPU servers: 2x AMD EPYC 7413, 4x AMD Instinct MI250, 512 GB main memory, 1x InfiniBand HDR NIC, Ubuntu 20.04.4 LTS (kernel 5.13.0-35-generic)

- Host server (where the PyTorch process runs): 2x AMD EPYC 7543, 2 TB main memory, 4x InfiniBand HDR NIC, Ubuntu 20.04.4 LTS (kernel 5.13.0-35-generic)

- Software: MoAI Framework 25.2.3037, PyTorch 2.1.0, Transformers 4.46.3, ROCm 5.3.3, UCX 1.10.0

- Model: DeepSeek-R1, BF16 parameters from unsloth
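Why does full fine-tuning of a 671B-parameter model need a cluster at all? A rough estimate helps. The sketch below assumes mixed-precision training with Adam, storing BF16 weights and gradients plus FP32 master weights and two FP32 optimizer moments (16 bytes per parameter in total), and it ignores activations and framework overhead. These are common rules of thumb, not numbers reported for this experiment. It also assumes 128 GB of HBM per MI250; since “GPU” could instead refer to one of the MI250’s two 64 GB compute dies, both readings are shown.

```python
# Back-of-the-envelope memory estimate for full fine-tuning of DeepSeek R1.
# Assumptions (not measured): mixed-precision Adam, activations ignored.
GB = 10**9  # decimal gigabytes; this is a rough estimate
PARAMS = 671 * 10**9

BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 adam first moment": 4,
    "fp32 adam second moment": 4,
}


def min_gpus(total_bytes: int, hbm_gb_per_gpu: int) -> int:
    """Smallest GPU count whose combined memory holds total_bytes (ceil division)."""
    return -(-total_bytes // (hbm_gb_per_gpu * GB))


weights_gb = PARAMS * BYTES_PER_PARAM["bf16 weights"] // GB  # 1,342 GB
state_bytes = PARAMS * sum(BYTES_PER_PARAM.values())
state_gb = state_bytes // GB                                  # 10,736 GB

for label, hbm_gb in [("MI250 package, 128 GB", 128), ("single MI250 die, 64 GB", 64)]:
    aggregate_gb = 240 * hbm_gb
    print(f"{label}: 240 GPUs hold {aggregate_gb:,} GB; "
          f"model state needs {state_gb:,} GB "
          f"(at least {min_gpus(state_bytes, hbm_gb)} GPUs)")
```

Under either reading, the 240-GPU cluster has enough aggregate memory for the model state, with room left for activations, while a single server (4x 128 GB) falls far short. The model therefore has to be partitioned across many GPUs, which is exactly the work the MoAI middleware takes over.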

The AMD MI250 is a previous-generation GPU (released in November 2021) with 128 GB of memory, while AMD’s latest MI300 series GPUs offer larger memory capacities (192-256 GB per GPU) and support FP8 operations. We therefore expect 2-3 times higher per-GPU performance on clusters of MI300X, MI325X, or MI308X GPUs.
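The reported throughput translates into per-GPU and wall-clock figures with simple arithmetic. The sketch uses the measured 24,500 tokens/sec on 240 GPUs, a hypothetical 1-billion-token fine-tuning dataset (one epoch), and the 2-3x per-GPU speedup estimated above for MI300 series GPUs at the same GPU count. None of the projected numbers are measurements.

```python
# Turning the measured throughput into per-GPU and wall-clock estimates.
MEASURED_TOKENS_PER_SEC = 24_500  # 240x MI250 full fine-tuning run
NUM_GPUS = 240
DATASET_TOKENS = 1_000_000_000    # hypothetical one-epoch dataset size


def hours_to_process(tokens: int, tokens_per_sec: float) -> float:
    """Wall-clock hours to stream `tokens` through training once."""
    return tokens / tokens_per_sec / 3600


per_gpu = MEASURED_TOKENS_PER_SEC / NUM_GPUS  # about 102 tokens/sec per GPU
print(f"per-GPU throughput: {per_gpu:.1f} tokens/sec")
print(f"measured: {hours_to_process(DATASET_TOKENS, MEASURED_TOKENS_PER_SEC):.1f} h per epoch")

# Projection only: assumes the 2-3x per-GPU estimate holds at the same GPU count.
for speedup in (2, 3):
    projected = MEASURED_TOKENS_PER_SEC * speedup
    print(f"{speedup}x: {projected:,} tokens/sec, "
          f"{hours_to_process(DATASET_TOKENS, projected):.1f} h per epoch")
```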

Conclusion and Future Work

One of the strengths of open-source LLMs is that they can easily be specialized for various domains through fine-tuning. For models with more than 100B parameters, however, the difficulty of clustering GPUs is usually a major obstacle to fine-tuning. Moreh provides the most suitable AI infrastructure solution for customers looking to leverage DeepSeek V3 and R1, flexibly supporting the entire process from fine-tuning to deployment. This is achieved by combining an efficient AMD GPU-based hardware architecture with MoAI’s differentiated software technology.

This post introduced MoAI’s training and fine-tuning environment, but MoAI also excels at LLM inference. By integrating Moreh’s proprietary libraries and model optimizations into vLLM, it achieves higher throughput (tokens/sec) than the original open-source vLLM. In the next blog post, we will show how MoAI accelerates DeepSeek V3 and R1 inference on an AMD MI300X/MI308X GPU server. We also hope to present real cases in which the DeepSeek models have been specialized for particular domains through fine-tuning.