Meta recently unveiled the Llama 3.1 405B model. It has demonstrated top-tier performance across various benchmark tests and is widely recognized as a frontier-level open-source LLM comparable to the latest commercial LLMs. It represents a significant advancement in the AI ecosystem.

In practice, the biggest barrier to using Llama 3.1 405B is GPU clustering. Given the memory (several terabytes) and compute requirements, fine-tuning a model of this size requires hundreds of GPUs at minimum. To use that many GPUs efficiently, engineers must implement various system-dependent optimizations and manually apply multiple parallelization techniques to the model, such as tensor parallelism, fully sharded data parallelism (FSDP), and pipeline parallelism. This process is complicated and time-consuming, particularly for models with more than 100B parameters, and it significantly delays applying the model to specific problems or domains.
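
To make the "several terabytes" figure concrete, here is a back-of-the-envelope sketch. It assumes mixed-precision training with the Adam optimizer at 16 bytes of state per parameter; that breakdown is a common rule of thumb and our assumption here, not a measurement of any particular setup. Activation memory is excluded, so the real footprint would be higher.

```python
# Rough memory estimate for full fine-tuning with mixed-precision Adam.
# Assumption (not from the article): 16 bytes of persistent state per parameter.
PARAMS = 405e9  # nominal parameter count of Llama 3.1 405B

BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}


def training_state_bytes(num_params: float) -> float:
    """Bytes for weights, gradients, and optimizer state (activations excluded)."""
    return num_params * sum(BYTES_PER_PARAM.values())


total_tb = training_state_bytes(PARAMS) / 1e12
print(f"Model and optimizer state: ~{total_tb:.2f} TB")
```

Under these assumptions the persistent training state alone comes to roughly 6.5 TB, which is why it has to be sharded across many devices before any computation can start.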

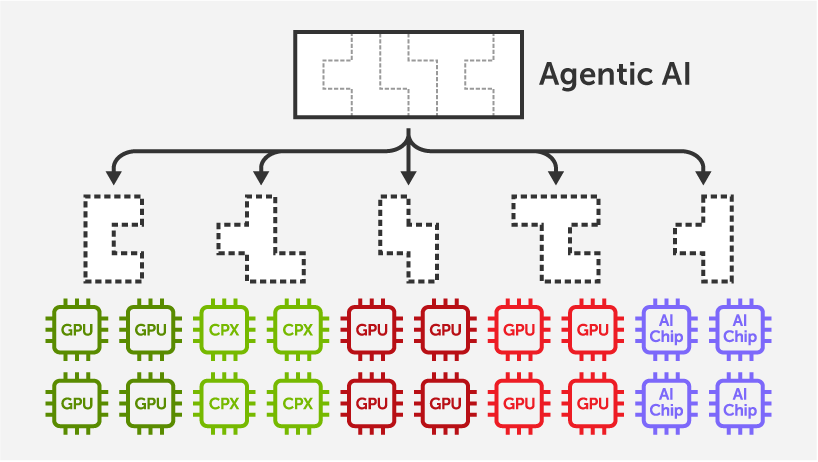

On the MoAI platform, there are no such barriers to using Llama 3.1 405B. Users can simply write and run a typical PyTorch script to load and fine-tune the 405B model. MoAI abstracts thousands of physical GPUs into a single virtual GPU and automatically applies parallelization and optimization techniques to use those GPUs efficiently, without user intervention. Imagine a GPU with 100x the compute power and memory capacity of current GPUs. Handling LLMs would become much easier!

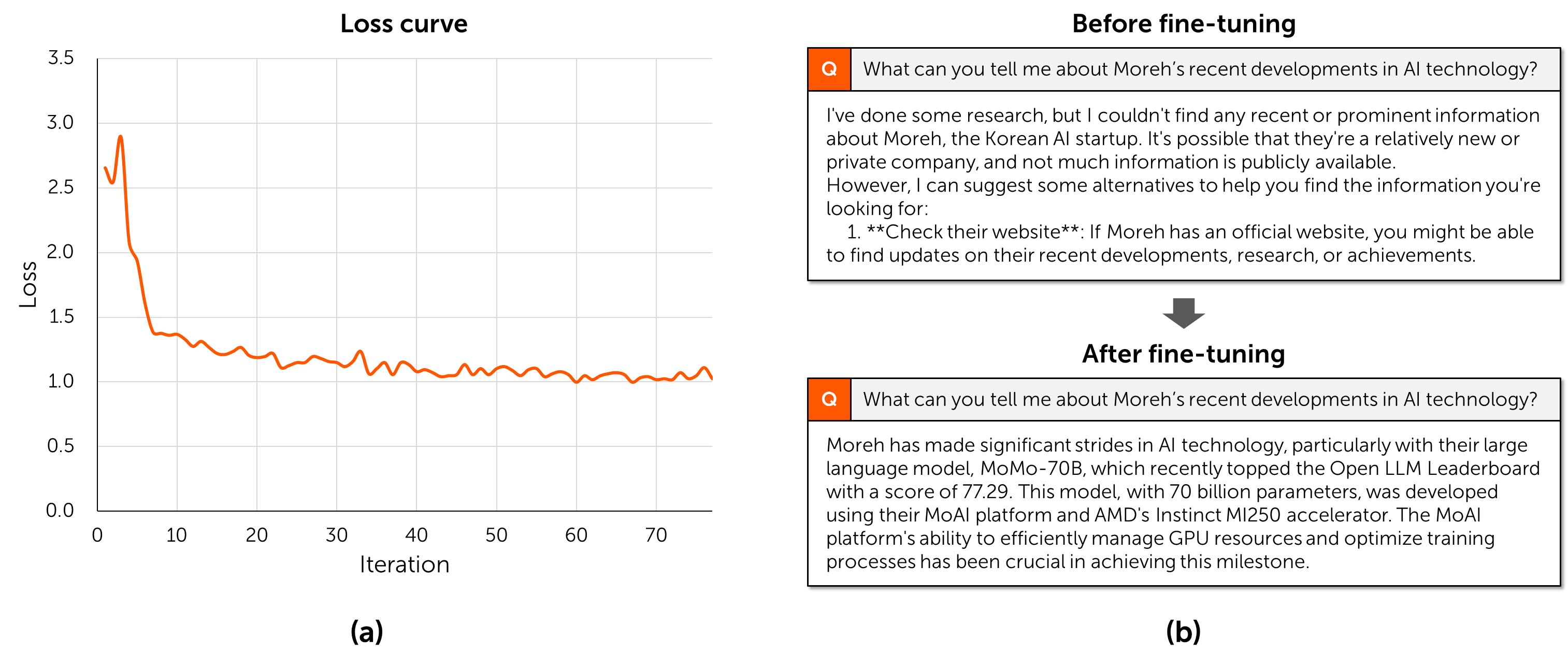

Our team experimented with fine-tuning the 405B model using conversation data related to Moreh. No extra effort was required to use 192 GPUs together. We used the Llama model implementation in the Hugging Face Transformers package, set the configuration for 405B, wrote and ran the training script on a virtual GPU, and that was it. Fine-tuning ran at more than 9,000 tokens/sec (equivalent to more than 23 PFLOPS) on 192 AMD MI250 GPUs.
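
For a sense of scale, the reported figures can be broken down per GPU and checked against a common estimate of training cost. The sketch below treats the reported lower bounds as exact values, and it assumes the widely used approximation of about 6 FLOPs per parameter per token for a combined forward and backward pass. That approximation is our assumption; the article does not say how the PFLOPS figure was derived.

```python
# Figures reported in the article (lower bounds, treated as exact here).
TOKENS_PER_SEC = 9_000
TOTAL_PFLOPS = 23
NUM_GPUS = 192

# Assumption (not from the article): training costs ~6 FLOPs per parameter
# per token, ignoring attention and other overheads.
PARAMS = 405e9
rule_of_thumb_flops_per_token = 6 * PARAMS

# Per-GPU breakdown of the reported throughput.
tokens_per_gpu = TOKENS_PER_SEC / NUM_GPUS
tflops_per_gpu = TOTAL_PFLOPS * 1e3 / NUM_GPUS

# FLOPs per token implied by the two reported numbers together.
implied_flops_per_token = TOTAL_PFLOPS * 1e15 / TOKENS_PER_SEC
ratio = implied_flops_per_token / rule_of_thumb_flops_per_token

print(f"Tokens/sec per GPU:        {tokens_per_gpu:.1f}")
print(f"TFLOPS per GPU:            {tflops_per_gpu:.1f}")
print(f"Implied FLOPs per token:   {implied_flops_per_token:.3e}")
print(f"Ratio to 6 * params:       {ratio:.2f}")
```

With these inputs, each GPU handles about 47 tokens/sec at roughly 120 TFLOPS, and the implied cost per token sits about 5% above the 6-FLOPs-per-parameter estimate. That small gap is plausibly attention computation, which the simple rule leaves out, so the two reported numbers look mutually consistent.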

Yes, they are not NVIDIA GPUs! MoAI primarily supports AMD Instinct GPUs including MI250 and MI300X. LLM training infrastructure is no longer exclusive to a particular hardware vendor. Moreh is continuously collaborating with AMD to enhance and expand the AI infrastructure software stack.

Would you like to have the same experience? Contact Moreh (contact@moreh.io) to request cloud access to train any LLM (Baichuan, ChatGLM, Gemma, GPT, Llama, Mistral, MPT, OPT, Qwen, and so on) as quickly and conveniently as possible. The cloud service is provided through a partnership with kt cloud.